Finding GPT-4’s mistakes with GPT-4: CriticGPT, an AI self-evaluation breakthrough

AI is transforming how we create and improve content. A new AI model from OpenAI, dubbed CriticGPT, catches mistakes in AI-generated content, which in turn helps improve the AI training process.

This makes it easier for humans to spot errors they might otherwise miss.

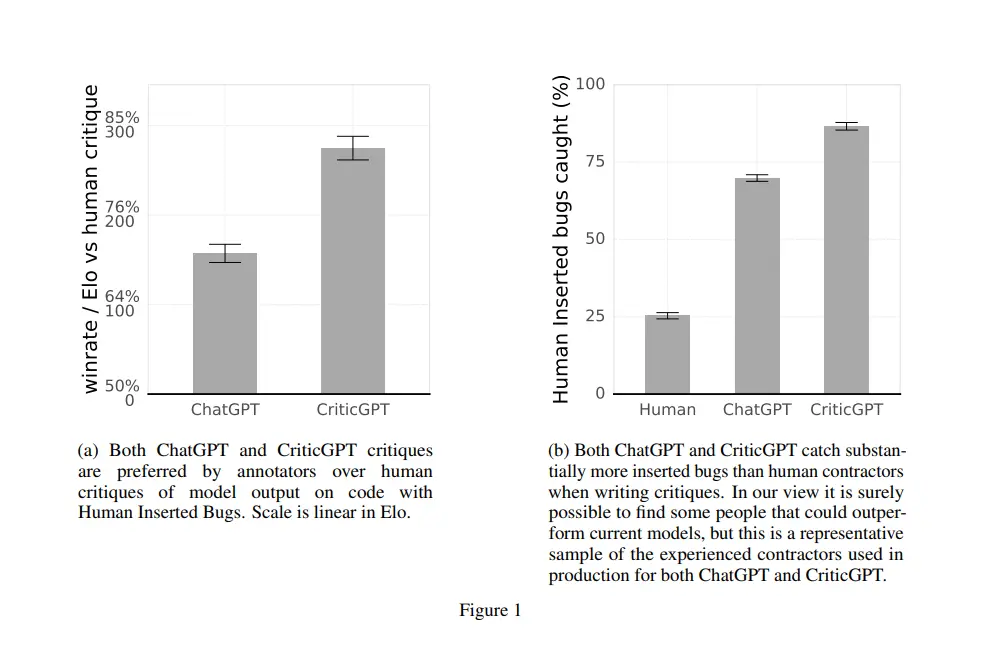

CriticGPT is based on GPT-4, the same technology behind ChatGPT. It reviews code that ChatGPT writes and points out potential problems. According to OpenAI, people who review ChatGPT’s code with CriticGPT’s help outperform those working without it 60% of the time.

This AI-powered critique system could change how AI models are trained and improved. It may help solve a key challenge: as AI gets smarter, it becomes harder for humans to evaluate its work accurately.

How CriticGPT catches AI-generated code errors

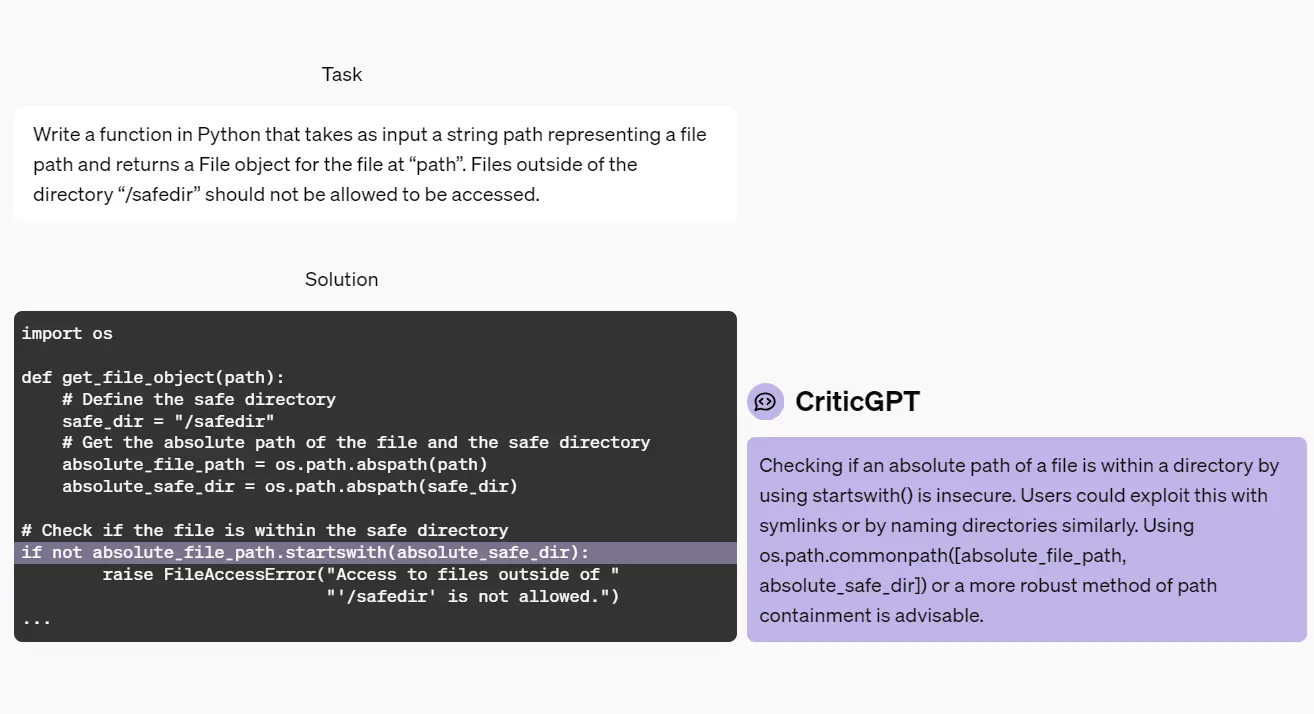

CriticGPT uses a unique training approach to spot mistakes in AI-generated code. It learns from examples of buggy code and feedback on those bugs.

The training process involves AI trainers introducing errors to ChatGPT’s code on purpose. These trainers then write feedback as if they found the bugs themselves.

This helps CriticGPT learn to spot real issues.
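As a rough illustration of the kind of training example this process produces, here is a minimal sketch. The `TamperedExample` record, the `make_example` helper, and the sample bug are all hypothetical, invented for illustration; they are not OpenAI's actual data format or tooling.

```python
from dataclasses import dataclass


@dataclass
class TamperedExample:
    """Hypothetical record: a question, code with a planted bug, and a critique."""
    question: str
    original_code: str
    tampered_code: str
    critique: str


def make_example(question, original_code, fixed_line, buggy_line, critique):
    """Plant a bug by swapping one known-good line for a buggy one."""
    if fixed_line not in original_code:
        raise ValueError("line to tamper with was not found in the original code")
    tampered = original_code.replace(fixed_line, buggy_line, 1)
    return TamperedExample(question, original_code, tampered, critique)


original = "def mean(xs):\n    return sum(xs) / len(xs)\n"
example = make_example(
    question="Write a function that returns the mean of a list.",
    original_code=original,
    fixed_line="    return sum(xs) / len(xs)",
    buggy_line="    return sum(xs) / (len(xs) - 1)",
    # The trainer writes the critique as if they had discovered the bug.
    critique="The divisor is len(xs) - 1, so the function does not return the mean.",
)
print(example.tampered_code)
```

Pairing the tampered code with a critique written by the person who planted the bug means every example comes with a known, real defect to point at.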

To test CriticGPT, researchers looked at how well it found both planted bugs and real ChatGPT errors. For real bugs, trainers preferred CriticGPT’s critiques over ChatGPT’s in 63% of cases.
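A preference figure like this is typically a win rate over pairwise comparisons. The sketch below shows that arithmetic, assuming each trainer vote simply names the critique they preferred; the `preference_rate` function and the vote data are made up for illustration and are not OpenAI's evaluation code.

```python
def preference_rate(votes, critic="a"):
    """Return the fraction of pairwise votes won by the given critic."""
    if not votes:
        raise ValueError("need at least one vote")
    wins = sum(1 for vote in votes if vote == critic)
    return wins / len(votes)


# Hypothetical data: "a" means the trainer preferred critic A's critique.
votes = ["a"] * 63 + ["b"] * 37
rate = preference_rate(votes)
print(f"{rate:.0%}")  # 63%
```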

CriticGPT has some key advantages:

- Fewer nitpicks: It avoids pointing out small, unhelpful issues

- Less hallucination: It’s less likely to see problems that aren’t there

- Flexible feedback: CriticGPT can give longer, more detailed critiques when needed

The CriticGPT model uses an additional search step when generating critiques to balance finding real problems against making things up. This lets trainers adjust how picky CriticGPT is, depending on their needs.
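One way to picture this kind of adjustable trade-off is to pick among several candidate critiques using a score that rewards quality plus a tunable bonus for each issue flagged. The sketch below is a simplified stand-in, not CriticGPT's actual search procedure; the `Candidate` fields, the `issue_bonus` knob, and the scores are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    quality: float   # hypothetical score from a model that rates critiques
    num_issues: int  # how many problems the critique flags


def pick_critique(candidates, issue_bonus):
    """Choose the candidate with the best quality plus a per-issue bonus."""
    return max(candidates, key=lambda c: c.quality + issue_bonus * c.num_issues)


candidates = [
    Candidate("Flags one clear bug.", quality=0.9, num_issues=1),
    Candidate("Flags four issues, some speculative.", quality=0.6, num_issues=4),
]

cautious = pick_critique(candidates, issue_bonus=0.0)  # favors precision
thorough = pick_critique(candidates, issue_bonus=0.2)  # favors coverage
print(cautious.text)
print(thorough.text)
```

With a bonus of zero the shorter, safer critique wins. Raising the bonus favors critiques that flag more problems, at the cost of more possible false alarms.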

By fine-tuning its approach, CriticGPT aims to give the most useful feedback for improving AI systems. This targeted critique helps make AI-generated code more reliable and bug-free.

Limits of CriticGPT

CriticGPT faces several challenges in its current form. The model’s training focused on short ChatGPT answers, making it less effective for longer, more complex tasks.

This limits its ability to help trainers understand and evaluate more intricate AI outputs.

AI hallucinations remain a problem. These false or misleading statements can trick trainers into making incorrect judgments. It’s thus crucial to stay vigilant when reviewing AI-generated content.

Some errors in AI responses are spread out across different parts of an answer. CriticGPT isn’t designed to catch these dispersed mistakes.

The tool works best when errors are contained in one specific area, which is rarely the case with large documents.

For extremely complex tasks or responses, even experts using CriticGPT may struggle to evaluate them correctly.

The tool enhances human judgment but can’t replace it entirely. You should view it as a helpful aid rather than a perfect solution.

Future improvements for AI alignment

OpenAI plans to expand its work on CriticGPT.

The company will put CriticGPT to use in real-world applications. This could lead to better training data for advanced AI models like GPT-4.

By using AI to check AI, OpenAI hopes to create more reliable systems. This approach shows promise for aligning complex AI with human values and goals.

Common mistakes GPT-4 makes

Patterns of errors in GPT-4’s outputs

GPT-4 can make several types of mistakes. It sometimes struggles with complex math problems.

The model may also give outdated information or make up facts when it’s unsure. Logical errors can occur in its reasoning, especially for multi-step problems.

When GPT-4 misses the mark on context

GPT-4 can misunderstand context in certain situations. It may miss sarcasm or subtle humor.

The model can also struggle with very specific cultural references. In technical discussions, it might misinterpret specialized jargon.

Spotting inaccuracies in GPT-4’s responses

You can catch GPT-4’s mistakes by fact-checking key points.

Compare its answers to reliable sources. Look for logical inconsistencies within the response. Pay attention to any claims that seem too good to be true.

Ways to check if GPT-4’s answers are reliable

To test GPT-4’s reliability:

- Ask the same question multiple times

- Rephrase your query to see if the answer changes

- Request sources or explanations for its statements

- Use CriticGPT to review code outputs
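The first two checks can be partly automated by collecting several answers to the same (or a rephrased) question and measuring how often they agree. This is a minimal sketch: the `agreement` helper is hypothetical, the sample answers are invented, and real responses would usually need smarter comparison than lowercasing and trimming.

```python
from collections import Counter


def agreement(answers):
    """Return the most common answer and the share of responses matching it."""
    if not answers:
        raise ValueError("need at least one answer")
    normalized = [a.strip().lower() for a in answers]
    top, count = Counter(normalized).most_common(1)[0]
    return top, count / len(normalized)


# Hypothetical answers gathered by asking the same question four times.
answers = ["Paris", "paris ", "Lyon", "Paris"]
top, share = agreement(answers)
print(top, share)  # paris 0.75
```

A low agreement share does not prove an answer is wrong, but it is a useful signal that the response deserves a closer look.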

Topics where GPT-4 tends to stumble

GPT-4 can struggle with:

- Very recent events

- Highly specialized technical fields

- Complex ethical dilemmas

- Tasks requiring real-time data

Its performance may vary in these areas.

How GPT-4’s error rate compares to older versions

GPT-4 makes fewer mistakes than its predecessors. It shows improved accuracy in most tasks.

The model outperforms previous versions in catching errors. But it’s not perfect – some errors still slip through.