Llama 3.1 is an open-source AI model from Meta AI that boasts capabilities rivaling top AI models in general knowledge, steerability, math, tool use, and multilingual translation.

It comes in three sizes, 8B, 70B, and 405B parameters, with the 405B model being the largest and most advanced.

Meta presents it as offering a degree of flexibility and control that rivals some of the best closed models on the market.

Meta’s bold move to release Llama 3.1 as an open-source model allows developers across the globe to innovate further. With strong performance across a range of benchmarks, this new AI could very well shape the future of digital assistants.

One of the standout features of Llama 3.1 is its extended context length, which allows it to process up to 128,000 tokens. This enhancement significantly improves its ability to understand and generate text over long passages.
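The prompt and the generated reply share the same 128,000-token window, so very long documents sometimes have to be split. The Python sketch below assumes you already have token IDs from the model's tokenizer. `chunk_for_context` and its default values are illustrative names, not part of any Llama API.

```python
# Context length as stated in this article; check your model's config for the exact limit.
CONTEXT_TOKENS = 128_000


def chunk_for_context(token_ids, max_new_tokens=1_024, context=CONTEXT_TOKENS):
    """Split token_ids into chunks that each leave max_new_tokens free for generation."""
    budget = context - max_new_tokens  # tokens left for the prompt in each request
    if budget <= 0:
        raise ValueError("max_new_tokens leaves no room for input")
    return [token_ids[i:i + budget] for i in range(0, len(token_ids), budget)]


# Example: 300,000 token IDs need three requests with the default budget.
chunks = chunk_for_context(list(range(300_000)))
print(len(chunks), [len(c) for c in chunks])  # prints: 3 [126976, 126976, 46048]
```

Chat-template tokens also count against the window, so in practice you would lower the budget slightly.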

The smallest variant, Llama 3.1 8B, also handles applications such as text summarization and classification, making it suitable for environments with limited computational resources (AWS).

Wondering how Llama 3.1 stacks up against other top AI models? Keep reading to find out.

Llama 3.1 evaluations

a) Llama 3.1 performance

| Category | Benchmark | # Shots | Metric | Llama 3 8B | Llama 3.1 8B | Llama 3 70B | Llama 3.1 70B | Llama 3.1 405B |
|---|---|---|---|---|---|---|---|---|
| General | MMLU | 5 | macro_avg/acc_char | 66.7 | 66.7 | 79.5 | 79.3 | 85.2 |
| | MMLU-Pro (CoT) | 5 | macro_avg/acc_char | 36.2 | 37.1 | 55.0 | 53.8 | 61.6 |
| | AGIEval English | 3-5 | average/acc_char | 47.1 | 47.8 | 63.0 | 64.6 | 71.6 |
| | CommonSenseQA | 7 | acc_char | 72.6 | 75.0 | 83.8 | 84.1 | 85.8 |
| | Winogrande | 5 | acc_char | – | 60.5 | – | 83.3 | 86.7 |
| | BIG-Bench Hard (CoT) | 3 | average/em | 61.1 | 64.2 | 81.3 | 81.6 | 85.9 |
| | ARC-Challenge | 25 | acc_char | 79.4 | 79.7 | 93.1 | 92.9 | 96.1 |
| Knowledge reasoning | TriviaQA-Wiki | 5 | em | 78.5 | 77.6 | 89.7 | 89.8 | 91.8 |
| Reading comprehension | SQuAD | 1 | em | 76.4 | 77.0 | 85.6 | 81.8 | 89.3 |
| | QuAC (F1) | 1 | f1 | 44.4 | 44.9 | 51.1 | 51.1 | 53.6 |
| | BoolQ | 0 | acc_char | 75.7 | 75.0 | 79.0 | 79.4 | 80.0 |
| | DROP (F1) | 3 | f1 | 58.4 | 59.5 | 79.7 | 79.6 | 84 |

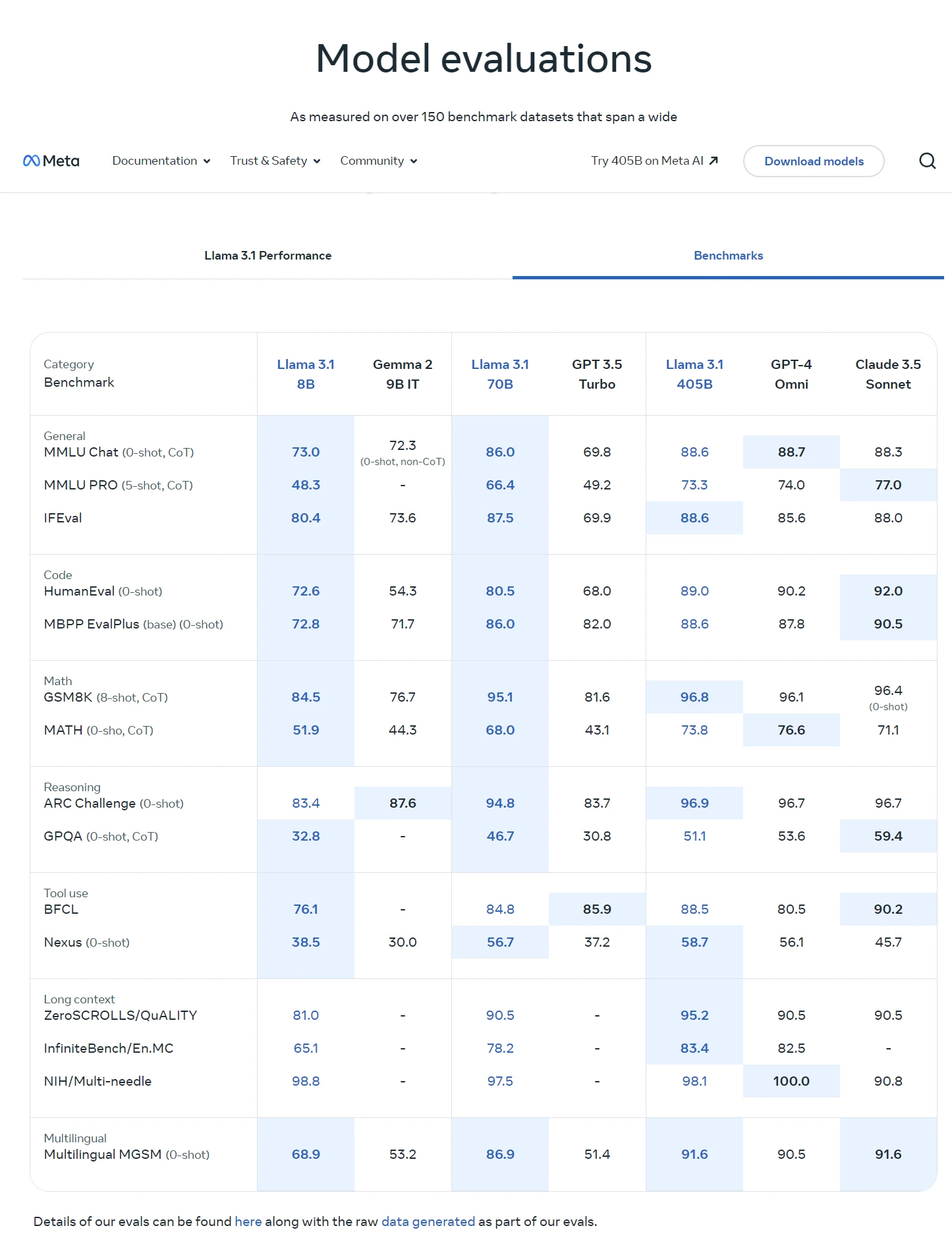

b) Llama 3.1 benchmark across AI models

| Category | Benchmark | Llama 3.1 8B | Gemma 2 9B IT | Llama 3.1 70B | GPT 3.5 Turbo | Llama 3.1 405B | GPT-4 Omni | Claude 3.5 Sonnet |
|---|---|---|---|---|---|---|---|---|
| General | MMLU Chat (0-shot, CoT) | 73.0 | 72.3 (0-shot, non-CoT) | 86.0 | 69.8 | 88.6 | 88.7 | 88.3 |
| | MMLU PRO (5-shot, CoT) | 48.3 | – | 66.4 | 49.2 | 73.3 | 74.0 | 77.0 |
| | IFEval | 80.4 | 73.6 | 87.5 | 69.9 | 88.6 | 85.6 | 88.0 |
| Code | HumanEval (0-shot) | 72.6 | 54.3 | 80.5 | 68.0 | 89.0 | 90.2 | 92.0 |
| | MBPP EvalPlus (base) (0-shot) | 72.8 | 71.7 | 86.0 | 82.0 | 88.6 | 87.8 | 90.5 |
| Math | GSM8K (8-shot, CoT) | 84.5 | 76.7 | 95.1 | 81.6 | 96.8 | 96.1 | 96.4 (0-shot) |
| | MATH (0-shot, CoT) | 51.9 | 44.3 | 68.0 | 43.1 | 73.8 | 76.6 | 71.1 |
| Reasoning | ARC Challenge (0-shot) | 83.4 | 87.6 | 94.8 | 83.7 | 96.9 | 96.7 | 96.7 |
| | GPQA (0-shot, CoT) | 32.8 | – | 46.7 | 30.8 | 51.1 | 53.6 | 59.4 |
| Tool use | BFCL | 76.1 | – | 84.8 | 85.9 | 88.5 | 80.5 | 90.2 |
| | Nexus (0-shot) | 38.5 | 30.0 | 56.7 | 37.2 | 58.7 | 56.1 | 45.7 |
| Long context | ZeroSCROLLS/QuALITY | 81.0 | – | 90.5 | – | 95.2 | 90.5 | 90.5 |
| | InfiniteBench/En.MC | 65.1 | – | 78.2 | – | 83.4 | 82.5 | – |
| | NIH/Multi-needle | 98.8 | – | 97.5 | – | 98.1 | 100.0 | 90.8 |
| Multilingual | Multilingual MGSM (0-shot) | 68.9 | 53.2 | 86.9 | 51.4 | 91.6 | 90.5 | 91.6 |

c) Llama 3.1 Multilingual benchmarks

| Language | Llama 3.1 8B Instruct | Llama 3.1 70B Instruct | Llama 3.1 405B Instruct |
|---|---|---|---|
| Portuguese | 62.12 | 80.13 | 84.95 |
| Spanish | 62.45 | 80.05 | 85.08 |
| Italian | 61.63 | 80.4 | 85.04 |
| German | 60.59 | 79.27 | 84.36 |
| French | 62.34 | 79.82 | 84.66 |
| Hindi | 50.88 | 74.52 | 80.31 |
| Thai | 50.32 | 72.95 | 78.21 |

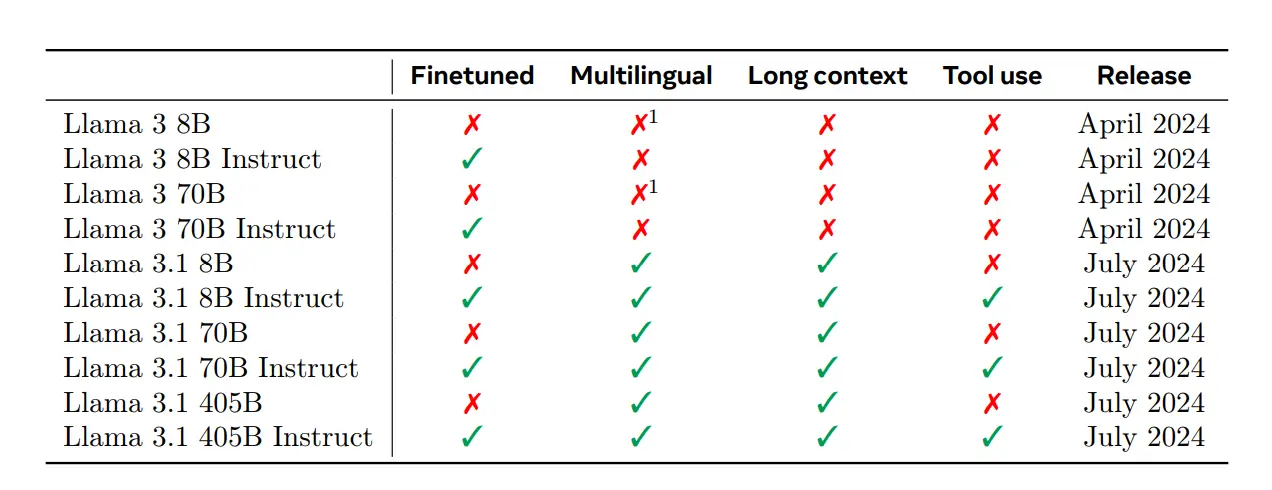

Llama 3.1 model capabilities

Evolution and features

Llama 3.1 represents a significant leap from previous versions.

The models are available in 8B, 70B, and 405B parameter sizes and are designed for complex tasks over large amounts of text.

The 405B variant, the largest, was trained on over 15 trillion tokens using more than 16,000 H100 GPUs.

This scale of training underpins its ability to handle intricate language tasks and long, information-dense inputs.

Improvements include a more efficient tokenizer, reducing the number of tokens required by up to 15% compared to Llama 2.

Additionally, Grouped-Query Attention (GQA) improves inference efficiency by letting groups of query heads share the same key and value heads.

Together, these changes make Llama 3.1 well suited to enterprise-level applications and research projects.
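Grouped-query attention lets several query heads share one key/value head. This shrinks the key/value cache kept during generation by the ratio of query heads to KV heads. The NumPy sketch below illustrates the general technique and is not Meta's implementation. The head counts and dimensions are toy values chosen for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def grouped_query_attention(q, k, v, causal=True):
    """q: (n_heads, seq, d); k and v: (n_kv_heads, seq, d), n_kv_heads dividing n_heads."""
    n_heads, seq, d = q.shape
    n_kv_heads = k.shape[0]
    if n_heads % n_kv_heads:
        raise ValueError("n_heads must be a multiple of n_kv_heads")
    group = n_heads // n_kv_heads
    # Share each KV head across `group` consecutive query heads.
    k = np.repeat(k, group, axis=0)
    v = np.repeat(v, group, axis=0)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d)  # (n_heads, seq, seq)
    if causal:
        # Block attention to future positions.
        future = np.triu(np.ones((seq, seq), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ v  # (n_heads, seq, d)


# Toy sizes: 8 query heads share 2 KV heads, so the KV cache is 4x smaller than with 8 KV heads.
rng = np.random.default_rng(0)
q = rng.standard_normal((8, 5, 16))
k = rng.standard_normal((2, 5, 16))
v = rng.standard_normal((2, 5, 16))
out = grouped_query_attention(q, k, v)
print(out.shape)  # (8, 5, 16)
```

Only the smaller `k` and `v` tensors need to be cached between decoding steps. The `np.repeat` expansion happens at compute time.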

Multilinguality

This new AI model supports a total of 8 languages:

- English

- French

- German

- Hindi

- Italian

- Portuguese

- Spanish

- Thai

Llama 3.1 may be able to output text in other languages, but only these eight have been evaluated as meeting Meta's performance thresholds for safety and helpfulness.

System requirements

Running Llama 3.1 requires robust infrastructure.

Especially for the 405B model, extensive computational resources are necessary.

The 405B model was trained on over 16,000 H100 GPUs. Even without training, running it usually calls for multi-GPU servers.

For deployment, cloud platforms like Amazon Bedrock offer scalable solutions, allowing you to utilize the model without investing in costly infrastructure.
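For the Amazon Bedrock route, a minimal sketch using boto3's Converse API might look like the following. The model ID `meta.llama3-1-70b-instruct-v1:0` and the `us-west-2` region are assumptions to verify in the Bedrock console, since model availability varies by region. The account also needs model access enabled.

```python
# Assumed Bedrock model ID; confirm the exact ID and regional availability in the Bedrock console.
MODEL_ID = "meta.llama3-1-70b-instruct-v1:0"


def build_converse_request(prompt, max_tokens=512, temperature=0.5):
    """Assemble keyword arguments for the bedrock-runtime Converse API."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def ask_bedrock(prompt, region="us-west-2"):  # region is an assumption
    import boto3  # deferred so build_converse_request() works without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(prompt))
    # The reply text is the first content block of the output message.
    return response["output"]["message"]["content"][0]["text"]
```

Calling `ask_bedrock("...")` requires configured AWS credentials. The model runs on AWS infrastructure rather than your own GPUs.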

Ensuring sufficient memory and processing power is crucial to fully leverage the capabilities of Llama 3.1, whether for fine-tuning or deployment.

Choose environments that support high parallelism and provide the needed capacity to handle its demanding computational requirements.
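A rough way to size hardware is to multiply the parameter count by the bytes each parameter occupies, then add the key/value cache for long contexts. The helpers below are a back-of-the-envelope sketch. They ignore activations and framework overhead and use nominal parameter counts. The KV-cache inputs (layers, KV heads, head dimension) should be read from the model's own configuration.

```python
# Approximate bytes per parameter for common weight formats.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp8": 1.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params, dtype="bf16"):
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def kv_cache_gb(n_layers, n_kv_heads, head_dim, seq_len, batch=1, bytes_per_value=2.0):
    """Key/value cache size: one K and one V vector per layer, KV head, and token."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_value / 1e9


# Nominal sizes only; real checkpoints have slightly different parameter counts.
for name, params in [("8B", 8e9), ("70B", 70e9), ("405B", 405e9)]:
    print(name, {dt: weight_memory_gb(params, dt) for dt in ("bf16", "fp8", "int4")})
```

At 16-bit precision the 405B weights alone come to about 810 GB. That is why quantization or a multi-GPU node is the usual route for that size.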

Running Llama 3.1 locally

Setting up Llama 3.1 involves ensuring your system is compatible and following a straightforward process to configure the software correctly.

Compatibility checks


First, check if your system meets the basic requirements for installing Llama 3.1.

You need a compatible operating system such as Windows, Linux, or macOS.

Ensure you have the necessary hardware, including a graphics processing unit (GPU) that supports hardware acceleration for better performance. Specifically, NVIDIA GPUs with CUDA support are recommended.

Additionally, ensure you have enough storage space and memory.

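The 8B model requires far less space than the 405B model. At least 128 GB of RAM is suggested for the larger models. The 405B model's 16-bit weights alone take roughly 810 GB, so running it locally generally calls for multi-GPU hardware or quantization.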

Confirm that your system has Python 3.8 or later installed, along with essential libraries like PyTorch.
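The checks above can be scripted. This sketch reports the OS, Python version, PyTorch version, visible CUDA GPUs, and physical RAM. The RAM lookup relies on POSIX `os.sysconf` names, so it returns `None` on Windows. `check_environment` is a hypothetical helper name.

```python
import os
import platform
import sys


def check_environment(min_python=(3, 8)):
    """Collect basic facts relevant to running Llama 3.1 locally."""
    report = {
        "os": platform.system(),  # e.g. "Linux", "Darwin" (macOS), "Windows"
        "python": platform.python_version(),
        "python_ok": sys.version_info[:2] >= min_python,
        "torch": None,
        "cuda_gpus": [],  # (name, memory in GB) per visible CUDA device
        "ram_gb": None,
    }
    try:
        import torch
    except ImportError:
        pass  # PyTorch is not installed
    else:
        report["torch"] = torch.__version__
        if torch.cuda.is_available():
            for i in range(torch.cuda.device_count()):
                props = torch.cuda.get_device_properties(i)
                report["cuda_gpus"].append((props.name, round(props.total_memory / 1e9, 1)))
    # Physical RAM via POSIX sysconf; these names do not exist on Windows.
    if hasattr(os, "sysconf"):
        try:
            pages = os.sysconf("SC_PHYS_PAGES")
            report["ram_gb"] = round(os.sysconf("SC_PAGE_SIZE") * pages / 1e9, 1)
        except (ValueError, OSError):
            pass
    return report


print(check_environment())
```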

Setup and configuration

Begin by downloading the desired Llama 3.1 model variant from the official Llama website.

Choose between 8B, 70B, and 405B models based on your needs and available resources.

After downloading, install the required Python dependencies with a package manager such as pip.

To set up Llama 3.1, follow detailed guides provided by Meta.

These guides cover various hosting options, including AWS, Kaggle, and Vertex AI. Choose the one that best suits your deployment environment.

During installation, configure the environment variables and paths as directed.

Run verification tests after installation to ensure everything is set up correctly.

This might involve running sample scripts or benchmarks.
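One common way to run such a check, not covered by the steps above, is through Hugging Face `transformers`. The sketch assumes `pip install torch transformers accelerate` has been run and that you have approved access to the gated repository. It also assumes the repo id `meta-llama/Meta-Llama-3.1-8B-Instruct`, so adjust these to your setup.

```python
# Assumed Hugging Face repo id (gated: accept Meta's license on the model page first).
MODEL_ID = "meta-llama/Meta-Llama-3.1-8B-Instruct"


def build_messages(prompt, system="You are a helpful assistant."):
    """Chat-style input accepted by the transformers text-generation pipeline."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
    ]


def smoke_test(prompt="Reply with the single word: ready", max_new_tokens=16):
    # Heavy imports are deferred so build_messages() can be used without them.
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=MODEL_ID,
        model_kwargs={"torch_dtype": torch.bfloat16},
        device_map="auto",  # requires the accelerate package
    )
    outputs = pipe(build_messages(prompt), max_new_tokens=max_new_tokens)
    # With chat input, generated_text holds the full conversation; the last turn is the reply.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `smoke_test()` downloads roughly 16 GB of weights on first use. A short coherent reply confirms that the model loads and generates.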

For a local installation, you can follow a step-by-step video tutorial that guides you through testing and troubleshooting common issues.

Proper setup and configuration ensure optimal performance and usability of Llama 3.1 models.

Supported capabilities

Llama 3.1 supports a variety of capabilities that expand its functionality.

These include multilinguality, enabling it to understand and generate content in different languages.

It also handles programming languages, making it a valuable tool for developers seeking automated assistance to improve code quality and efficiency.

Additionally, Llama 3.1 is adept at reasoning and tool usage, making it versatile across various applications.

This flexibility lets you tailor it to specific needs, whether in research, content generation, or technical problem-solving.

Refinements in post-training have further improved the alignment of its responses and its ability to handle complex tasks.

Key achievements include boosting response alignment and lowering false refusal rates, enhancing reliability and efficiency.
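Tool use typically works by having the model emit a structured call that your application then executes. This sketch assumes the model returns a JSON object with `name` and `parameters` keys, the shape of Llama 3.1's JSON-based tool calling. Check Meta's prompt-format documentation for the exact format. `get_weather` is a hypothetical tool.

```python
import json


def get_weather(city: str) -> str:
    # Hypothetical tool; a real implementation would query a weather service.
    return f"Sunny in {city}"


# Registry mapping tool names the model may emit to Python callables.
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(model_output: str) -> str:
    """Parse a JSON tool call such as {"name": ..., "parameters": {...}} and run it."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("parameters", {}))


result = dispatch_tool_call('{"name": "get_weather", "parameters": {"city": "Paris"}}')
print(result)  # Sunny in Paris
```

The tool's result would then be sent back to the model so it can compose a final answer. Keeping an explicit registry prevents the model from invoking arbitrary functions.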

Llama 3.1 FAQs

What improvements have been implemented in Llama 3.1 compared to its predecessor?

Llama 3.1 introduces a tokenizer with a vocabulary of 128K tokens, encoding language much more efficiently.

Inference efficiency has been improved through grouped query attention (GQA) available in both the 8B and 70B sizes.

These enhancements make it the most capable version to date.

Can Llama 3.1 be used for commercial purposes?

Yes, Llama 3.1 can be used commercially.

If you are uncertain whether your use is permitted under the Llama 3.1 Community License, you can submit a request for a bespoke license.

For more detailed information, see the Meta Llama FAQs.

How does Llama 3.1 performance measure against current AI language models?

Llama 3.1 stands out for its efficient language encoding and improved inference capabilities.

Compared to Llama 2 and other models, it offers better performance in tasks like reasoning, code generation, and instruction following.

In what ways can developers integrate Llama 3.1 into their existing applications?

Developers can integrate Llama 3.1 through its instruction-tuned models, whose refined post-training improves response alignment and diversity.

The model is designed to handle multi-step tasks, making it suitable for a range of applications.

Step-by-step guidance is available on Meta's "Llama 3.1 | Model Cards and Prompt formats" page.
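The instruction-tuned models expect conversations wrapped in special header tokens. The sketch below assembles a basic Llama 3.1 chat prompt by hand to show the structure. In practice, prefer `tokenizer.apply_chat_template` from `transformers`. Its official template may add extra system text, such as date lines, that this simplified version omits.

```python
def format_llama31_prompt(messages):
    """Build a simplified Llama 3.1 chat prompt from {"role", "content"} dicts."""
    parts = ["<|begin_of_text|>"]
    for m in messages:
        # Each turn: role header, blank line, content, end-of-turn token.
        parts.append(
            f"<|start_header_id|>{m['role']}<|end_header_id|>\n\n{m['content']}<|eot_id|>"
        )
    # Leave an open assistant header so the model writes the next turn.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


prompt = format_llama31_prompt([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is the capital of France?"},
])
print(prompt)
```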

What set of features distinguishes Llama 3.1 from other AI tools on the market?

Llama 3.1 combines scalability and strong benchmark performance with grouped query attention (GQA) and a tokenizer with a 128K-token vocabulary.

Its refined post-training lowers false refusal rates and improves overall response quality. It also brings capabilities such as reasoning and code generation.

The Llama 3.1 models from Meta include three distinct versions: 8B, 70B, and 405B, each designed for different applications and computational capabilities.

Llama 3.1 405B

The Llama 3.1 405B model was, at its release, the largest openly available language model, featuring 405 billion parameters. It is optimized for enterprise-level applications and research, excelling in tasks such as synthetic data generation, multilingual translation, and complex reasoning. The model supports a context length of 128K tokens, significantly enhancing its ability to handle long-form content and intricate dialogues. Its capabilities make it suitable for advanced applications, including coding, math, and decision-making tasks, positioning it as a leading model in the AI landscape[1][2][3].

Llama 3.1 70B

The Llama 3.1 70B model, with 70 billion parameters, is tailored for large-scale AI applications and content creation. It is particularly effective in text summarization, sentiment analysis, and dialogue systems. Like the 405B version, it also supports a context length of 128K tokens, allowing for improved performance in tasks that require nuanced understanding and language generation. This model is ideal for enterprises looking to implement conversational AI and language understanding capabilities[1][2][4].

Llama 3.1 8B

The Llama 3.1 8B model is designed for environments with limited computational resources, featuring 8 billion parameters. It is best suited for applications that require low-latency inferencing, such as text classification and language translation. Despite its smaller size, it still supports a context length of 128K tokens, making it versatile for various generative AI tasks while being more accessible for developers with less powerful hardware[1][2][3].

All three models leverage advanced transformer architectures and are capable of handling multilingual tasks across eight languages, enhancing their utility in diverse applications[2][3].