In the ever-evolving landscape of artificial intelligence, OpenAI has remained at the forefront, pushing the boundaries with its generative pre-trained transformer (GPT) models.

The announcements at OpenAI's recent DevDay have sparked excitement across the tech community, chief among them GPT-4 Turbo, a model that promises to change how we interact with AI.

OpenAI DevDay takeaways:

- GPT-4 Turbo’s context window: 128K tokens, roughly the equivalent of 300 pages of text.

- Lower cost: Cheaper per-token pricing makes AI integration more economical.

- Visual processing: Accepts images as inputs, expanding the range of AI applications.

- Developer access: Available in preview to all paying API developers.

- Ethical foresight: OpenAI’s Copyright Shield initiative signals a commitment to responsible AI deployment.

Sadly, unlike the GPT-3.5 Turbo iteration, GPT-4 Turbo does not come with increased speed yet, though users can expect this improvement in the coming months.

Overview of DevDay announcements

OpenAI’s DevDay, which took place on November 6, 2023, showcased the company’s commitment to innovation, with GPT-4 Turbo taking center stage and setting a new standard for language models.

Main highlights:

- GPT-4 Turbo’s context length expands to 128K tokens.

- GPT-4 Turbo handles much longer inputs, so more of a conversation or document can be recalled within a single request.

- Four headline multimodal releases: DALL·E 3 (image generation), GPT-4 Turbo with vision (image understanding), text-to-speech (TTS), and Whisper V3 (speech recognition).

- OpenAI positions GPT-4 Turbo as more capable than GPT-4.

- Lower cost: GPT-4 Turbo’s input tokens cost one-third and its output tokens half of GPT-4’s prices (3× and 2× cheaper, respectively). The preview release is available to all developers.

- JSON mode and improved function calling, including the ability to call multiple functions in a single message, give developers finer control over the model’s output.

- Tokens-per-minute rate limits have doubled for paying GPT-4 customers, and developers can request higher limits in their account settings.

- Knowledge of world events up to April 2023, with retrieval-augmented generation (RAG) available through the Assistants API’s Retrieval tool.

- Commitment to open source: Whisper large-v3 was released as an open-source model, with API support planned.

- The Copyright Shield initiative, under which OpenAI covers legal costs for eligible customers facing copyright infringement claims.

- Custom “GPTs”: tailored versions of ChatGPT that anyone can build.

- The Assistants API, with built-in tools such as Retrieval and Code Interpreter.
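To make the function-calling point concrete, here is a minimal sketch of the developer side of a parallel call: the model returns one assistant message containing several `tool_calls`, and your code runs each one and builds a `tool` message per result. It works on plain dicts shaped like Chat Completions messages so it runs without the SDK; `get_weather` and its stubbed forecast are hypothetical, not part of any OpenAI API.

```python
import json


# Hypothetical local tool the model may ask for; returns a stub, not real data.
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny"}


# Map declared tool names to local Python callables.
TOOLS = {"get_weather": get_weather}

# Declaration passed as the "tools" argument of a chat completion request.
TOOL_SPECS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the weather forecast for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Run every tool call in one assistant message and build the 'tool'
    role messages that would be sent back to the model."""
    results = []
    for call in assistant_message.get("tool_calls", []):
        fn = TOOLS[call["function"]["name"]]
        # Arguments arrive as a JSON-encoded string, not as a dict.
        args = json.loads(call["function"]["arguments"])
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return results
```

Because each result carries its `tool_call_id`, the model can match answers to requests even when it asked for several functions at once.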

Key features of GPT-4 Turbo

a) Enhanced contextual understanding

GPT-4 Turbo introduces a 128,000-token context window, roughly the equivalent of 300 pages of text.

This means GPT-4 Turbo can stay coherent and relevant over much longer interactions: its window is four times the 32K tokens of the largest GPT-4 variant and sixteen times the standard 8K model.

Its closest rival on this front is Anthropic’s Claude, which supports up to 100K tokens.
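A quick way to reason about that window is a token budget check. The sketch below assumes the 128,000-token window, a 4,096-token output cap for `gpt-4-1106-preview`, and the common rule of thumb of about 4 characters per English token; it is a rough estimate only, and OpenAI's `tiktoken` library gives exact counts. (The 300-page figure works out to roughly 427 tokens per page.)

```python
import math

# Assumptions: 128K window, 4,096-token reply cap, ~4 chars per token (heuristic).
TURBO_CONTEXT_TOKENS = 128_000
MAX_OUTPUT_TOKENS = 4_096
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count; not a real tokenizer."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_output: int = MAX_OUTPUT_TOKENS) -> bool:
    """True if the prompt plus the tokens reserved for the reply fit the window."""
    return estimate_tokens(text) + reserved_output <= TURBO_CONTEXT_TOKENS
```

Reserving room for the reply matters because the prompt and the response share the same window.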

b) Improved performance and efficiency

Beyond the larger context, GPT-4 Turbo is also considerably cheaper to run.

Lower per-token prices mean developers can now use AI capabilities that were previously cost-prohibitive.

c) Support for multimodal inputs

Moving beyond text, GPT-4 Turbo now accepts visual input.

With the capacity to process images as inputs, it lays the foundation for a multitude of applications, from aiding the visually impaired to streamlining complex data analysis.

Developers can use GPT-4 Turbo with vision (the gpt-4-vision-preview model in the API), often called GPT-4V, to build multimodal tools.
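In the Chat Completions API, an image goes in as an `image_url` content part next to the text of the same user message. The sketch below only builds the request arguments (no network call); the question, the example URL, and the `max_tokens` value are illustrative assumptions. A local file can be passed as a base64 data URL.

```python
import base64


def image_data_url(image_bytes: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL for a local image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def vision_request(question: str, image_url: str) -> dict:
    """Chat Completions arguments mixing text and an image in one user message."""
    return {
        "model": "gpt-4-vision-preview",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        # Cap the reply length explicitly; 300 is an arbitrary example value.
        "max_tokens": 300,
    }
```

With the `openai` Python package (v1.x), these arguments would be sent as `client.chat.completions.create(**vision_request(...))`.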

d) Fine-tuning and custom models

GPT-4 fine-tuning is offered through an experimental access program, while the Custom Models program lets organizations with very large proprietary datasets work with OpenAI to train tailor-made models.

So far, fine-tuning has only been generally available for GPT-3.5 models.
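For the GPT-3.5 fine-tuning that is available today, training data is a JSONL file where each line holds one chat conversation under a `messages` key. This sketch serializes (user, assistant) pairs into that shape; the system prompt and example pairs are placeholders you would replace with your own data.

```python
import json


def to_chat_jsonl(examples: list[tuple[str, str]], system_prompt: str) -> str:
    """Serialize (user, assistant) pairs as chat-format JSONL, one example per line."""
    lines = []
    for user_text, assistant_text in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"
```

With the `openai` Python package (v1.x), the saved file would be uploaded via `client.files.create(file=..., purpose="fine-tune")` and a job started with `client.fine_tuning.jobs.create(training_file=..., model="gpt-3.5-turbo")`.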

e) Assistants API: a new era of AI applications

The Assistants API marks a leap towards more dynamic AI experiences within applications, offering tools like Code Interpreter and Retrieval to developers, simplifying the integration of complex functionalities into their AI applications.

Code Interpreter runs Python code in a sandboxed environment, while Retrieval augments the assistant with knowledge from outside the model, such as documents you upload.

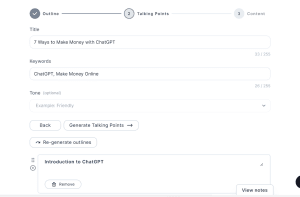
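Enabling both built-in tools is a matter of listing them when the assistant is created. The sketch below just assembles those creation arguments; the name and instructions are hypothetical, and the tool type names reflect the DevDay beta of the Assistants API (later API versions replaced `retrieval` with `file_search`).

```python
def assistant_config(name: str, instructions: str) -> dict:
    """Arguments for creating an assistant with Code Interpreter and Retrieval."""
    return {
        "name": name,
        "instructions": instructions,
        "model": "gpt-4-1106-preview",
        # Tool type names as of the DevDay (v1 beta) Assistants API.
        "tools": [{"type": "code_interpreter"}, {"type": "retrieval"}],
    }
```

In the v1.x `openai` Python package this would be passed to `client.beta.assistants.create(**assistant_config(...))`; conversations then run through threads (`client.beta.threads.create()`) and runs (`client.beta.threads.runs.create(...)`).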

f) Custom GPTs

GPTs, or customized versions of ChatGPT, democratize the power of AI, allowing even those without technical expertise to tailor-make AI solutions.

Whether it’s for personal use, corporate innovation, or entrepreneurial ventures, GPTs provide the canvas for limitless creativity.

The GPT Store is also expected to go live later this month, letting you share your custom GPTs and, in the coming months, earn money based on how many people use them.

Pricing and accessibility

Detailed pricing breakdown

GPT-4 Turbo introduces a new pricing structure designed to make these advanced AI capabilities more accessible.

| Model | Input cost | Output cost |
|---|---|---|
| GPT-4 Turbo | $0.01 per 1K tokens | $0.03 per 1K tokens |
| GPT-3.5 Turbo | $0.001 per 1K tokens | $0.002 per 1K tokens |
| DALL·E 3 | N/A | $0.04 per image (standard, 1024×1024) |
| Text-to-Speech | $0.015 per 1,000 characters | N/A |
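Since input and output are billed at different rates, it helps to price a workload directly. This sketch hard-codes the prices from the table above; the dictionary keys are table labels rather than API model IDs, and `Decimal` avoids floating-point rounding in currency sums.

```python
from decimal import Decimal

# Prices from the pricing table, in USD; token prices are per 1,000 tokens.
# Keys are table labels, not API model IDs. DALL·E 3 assumes standard 1024x1024.
TOKEN_PRICES = {
    "GPT-4 Turbo": (Decimal("0.01"), Decimal("0.03")),
    "GPT-3.5 Turbo": (Decimal("0.001"), Decimal("0.002")),
}
DALLE3_PER_IMAGE = Decimal("0.04")
TTS_PER_1K_CHARS = Decimal("0.015")


def text_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Cost of one request; input and output tokens are billed separately."""
    price_in, price_out = TOKEN_PRICES[model]
    return (price_in * input_tokens + price_out * output_tokens) / 1000


def tts_cost(characters: int) -> Decimal:
    """Text-to-speech is billed per 1,000 input characters."""
    return TTS_PER_1K_CHARS * characters / 1000


def image_cost(images: int) -> Decimal:
    """DALL·E 3 is billed per generated image."""
    return DALLE3_PER_IMAGE * images
```

For example, a request with 50,000 input and 2,000 output tokens comes to $0.56 on GPT-4 Turbo and $0.054 on GPT-3.5 Turbo.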

Accessing these new features and models

All paying API developers can now access GPT-4 Turbo by passing the appropriate model name in the API.

The model gpt-4-turbo does not exist?

If you try to select GPT-4 Turbo in your API-based application or in the Playground, you will not find a model named gpt-4-turbo, unlike gpt-3.5-turbo.

This may be because it is still in preview, or because OpenAI has changed how it names its models.

To use GPT-4 Turbo in the OpenAI dashboard or the API, select gpt-4-1106-preview, the latest model from OpenAI.
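Here is what a request targeting that model ID might look like, combined with the new JSON mode. The sketch builds the Chat Completions arguments without sending them; the prompts are placeholders. JSON mode expects the word "JSON" to appear in the messages, and it constrains syntax only, so your code should still check the fields it needs.

```python
import json


def json_mode_request(task: str) -> dict:
    """Chat Completions arguments targeting GPT-4 Turbo with JSON mode enabled."""
    return {
        "model": "gpt-4-1106-preview",
        # JSON mode: the reply should be a valid JSON object (a reply cut off
        # by the token limit can still be incomplete).
        "response_format": {"type": "json_object"},
        "messages": [
            # JSON mode expects the word "JSON" somewhere in the messages.
            {"role": "system", "content": "Reply only with a JSON object."},
            {"role": "user", "content": task},
        ],
    }


def parse_reply(content: str) -> dict:
    """Decode the reply text; validate the expected keys separately."""
    return json.loads(content)
```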

Comparing GPT-4 Turbo to previous models

GPT-4 Turbo vs. GPT-4

GPT-4 Turbo pulls ahead of GPT-4 with a more recent knowledge cutoff (April 2023, versus September 2021) and a much larger context window, allowing it to work with longer and more complex inputs.
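The "3× cheaper input, 2× cheaper output" claim is easy to check on a concrete request. This sketch uses the published per-1,000-token list prices at the time of DevDay for the 8K GPT-4 model and for GPT-4 Turbo, and rejects requests that would not fit in the model's window.

```python
from decimal import Decimal

# Per-1,000-token list prices in USD (input, output) around DevDay.
# "gpt-4" is the 8K-context model; the 32K variant was priced higher.
PRICES = {
    "gpt-4": (Decimal("0.03"), Decimal("0.06")),
    "gpt-4-1106-preview": (Decimal("0.01"), Decimal("0.03")),
}
CONTEXT_WINDOW = {"gpt-4": 8_192, "gpt-4-1106-preview": 128_000}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Price one request; raise if it cannot fit in the model's context window."""
    total = input_tokens + output_tokens
    if total > CONTEXT_WINDOW[model]:
        raise ValueError(f"{model} cannot hold {total} tokens")
    price_in, price_out = PRICES[model]
    return (price_in * input_tokens + price_out * output_tokens) / 1000
```

A request with 6,000 input and 1,000 output tokens costs $0.24 on GPT-4 and $0.09 on GPT-4 Turbo, about 2.7× less; the more input-heavy the workload, the closer the saving gets to 3×.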

GPT-4 Turbo vs. GPT-3.5 Turbo

Compared with GPT-3.5 Turbo, GPT-4 Turbo can process image inputs (through its vision variant) and handles a broader range of tasks, though GPT-3.5 Turbo remains about 10× cheaper per input token.
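One practical consequence is routing: send a request to the cheaper model when it can handle it and escalate otherwise. The policy below is a hypothetical illustration, not an OpenAI recommendation, and the context sizes (16,385 tokens for `gpt-3.5-turbo-1106`, 128,000 for `gpt-4-1106-preview`) are assumptions based on the November 2023 lineup.

```python
# Hypothetical routing policy for illustration; context sizes are assumptions.
GPT35_CONTEXT = 16_385
GPT4_TURBO_CONTEXT = 128_000


def pick_model(prompt_tokens: int, has_images: bool, reply_tokens: int = 1_000) -> str:
    """Return the cheapest model ID that can plausibly serve the request."""
    needed = prompt_tokens + reply_tokens  # prompt and reply share the window
    if has_images:
        # Of these models, only GPT-4 Turbo with vision accepts image input.
        return "gpt-4-vision-preview"
    if needed <= GPT35_CONTEXT:
        return "gpt-3.5-turbo-1106"  # about 10x cheaper per input token
    if needed <= GPT4_TURBO_CONTEXT:
        return "gpt-4-1106-preview"
    raise ValueError("request exceeds the largest available context window")
```

A real router would also weigh task difficulty, since GPT-4 Turbo's stronger reasoning can justify its price even on short prompts.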

How GPT-4 Turbo is shaping the future

Impact on various industries

The implications of GPT-4 Turbo reach many industries, from healthcare, where it can assist with medical diagnoses, to education, where it enables personalized learning experiences.

Potential for innovation and creativity

GPT-4 Turbo’s capabilities open new avenues for innovation and creativity, from generating complex designs and sophisticated data analysis to AI-assisted art and storytelling.

Addressing copyright concerns

With great power comes great responsibility.

With this update, OpenAI introduces Copyright Shield, under which it will defend ChatGPT Enterprise and API customers against copyright infringement claims and pay the resulting costs, underscoring the company’s commitment to responsible AI use.

Getting started with GPT-4 Turbo

Developers can start with GPT-4 Turbo by following OpenAI’s documentation and using its SDKs to integrate these capabilities into their applications.
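A first call can be very short. This sketch assumes the official `openai` Python package (v1.x) is installed and an `OPENAI_API_KEY` environment variable is set; the default system prompt is a placeholder. The SDK is imported inside `ask` so the message-building helper works even without it.

```python
def build_messages(user_prompt: str,
                   system_prompt: str = "You are a helpful assistant.") -> list[dict]:
    """Assemble the message list for the Chat Completions endpoint."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def ask(user_prompt: str, model: str = "gpt-4-1106-preview") -> str:
    """Send one prompt to GPT-4 Turbo and return the reply text.

    Requires the `openai` package (v1.x) and OPENAI_API_KEY in the environment.
    """
    from openai import OpenAI  # lazy import: build_messages works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(user_prompt),
    )
    return response.choices[0].message.content
```

Calling `ask("Summarize the DevDay announcements in one sentence.")` would return the model's reply as a string.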

The road ahead for GPT-4 Turbo and AI tech

The release of GPT-4 Turbo is not just an incremental update; it is a significant step in AI that is likely to ripple across the tech landscape.

As we embark on this new chapter, the potential of AI to reshape industries, creativity, and daily life has never been more palpable.

Even more grounding features may come to ChatGPT with the much-anticipated GPT-5.