Project Astra builds on the Gemini models and represents the next step in AI assistant development.

This innovative project focuses on creating an AI that can process multiple types of information at once, understand the specific context of a situation, and engage in natural conversations.

One of the most exciting aspects of Project Astra is its versatility.

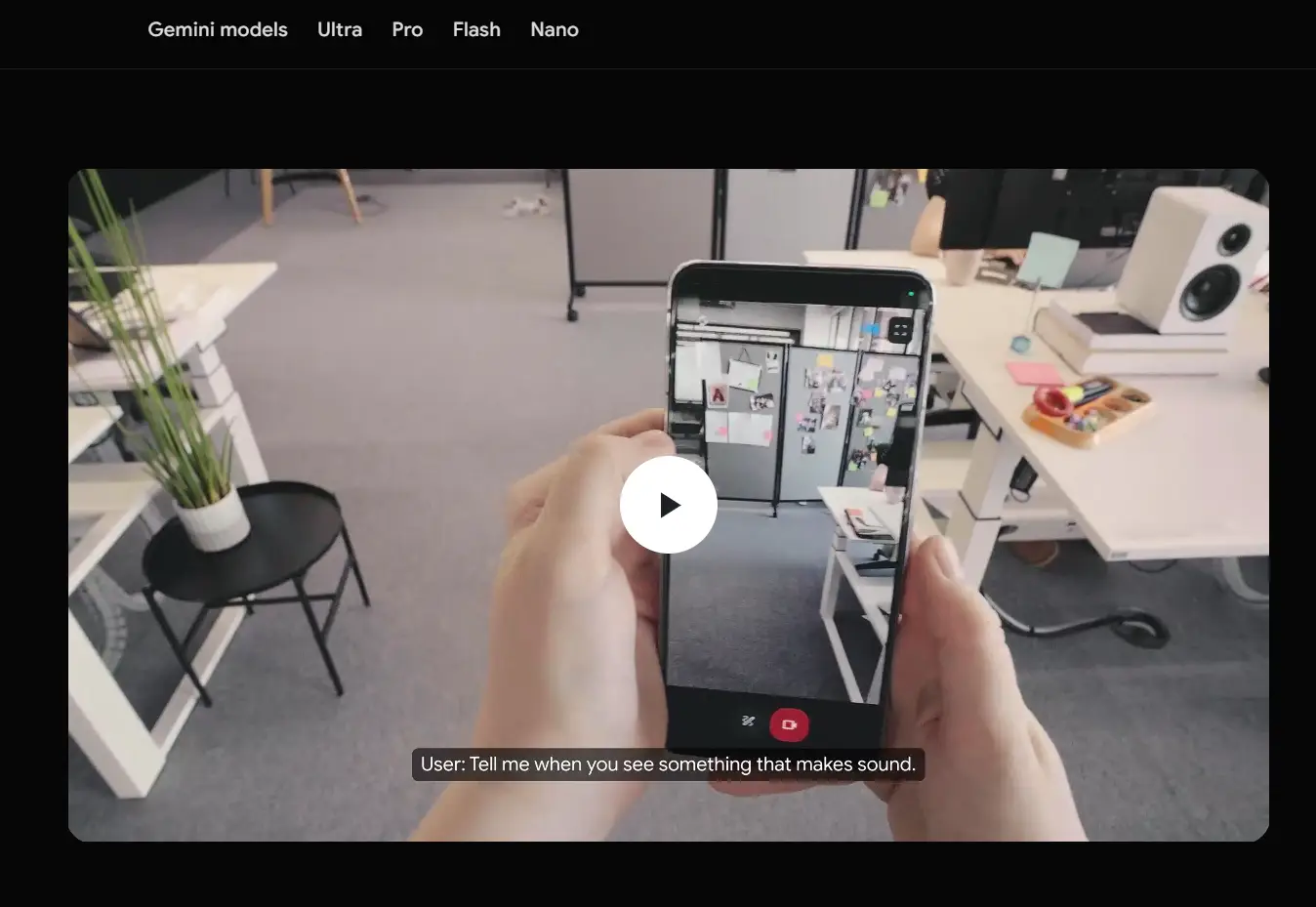

At a recent Google I/O keynote, Google demonstrated the prototype running on both a Google Pixel phone and a prototype glasses device.

This universal AI aims to aid us in everyday life, making technology more intuitive and accessible.

Testing Project Astra

Project Astra has impressive skills. During the Google I/O keynote, it displayed a range of abilities in real time.

- Physics Drawings: Astra could explain physics-related diagrams.

- Race Car Details: It identified different parts of a race car.

- Landmark Drawings: Astra recognized drawings of famous landmarks.

- Sequence Memory: It memorized a sequence of objects.

- Literature Interpretation: Astra interpreted drawings from literary works.

All demos were done using a Google Pixel phone or prototype glasses. Experiencing these capabilities firsthand was quite fascinating.

Behind the scenes

Creating a useful AI assistant involves making it understand and respond to the world the way humans do. It needs to see and hear what’s happening in order to understand the context and act on it.

Google wants its AI agents to be proactive, teachable, and personal, and to support natural conversations without noticeable lag, similar to GPT-4o's voice interactions.

By incorporating advanced speech models, Google is enhancing how these agents sound, giving them varied intonations.

These improvements help the AI better understand the context it’s used in and respond promptly during conversations.

By developing multimodal AI that integrates vision, voice, and text, Google aims to equip its AI assistants to work seamlessly across devices such as phones and smart glasses, creating a more dynamic user experience.

What’s next

With Project Astra, I can imagine having an expert AI assistant available everywhere, accessed through a phone or glasses.

Some of these features will soon come to Google products such as the Gemini app, including on the web. Android users in the U.S. will see these updates first, according to Sundar Pichai and Demis Hassabis.

Highlights

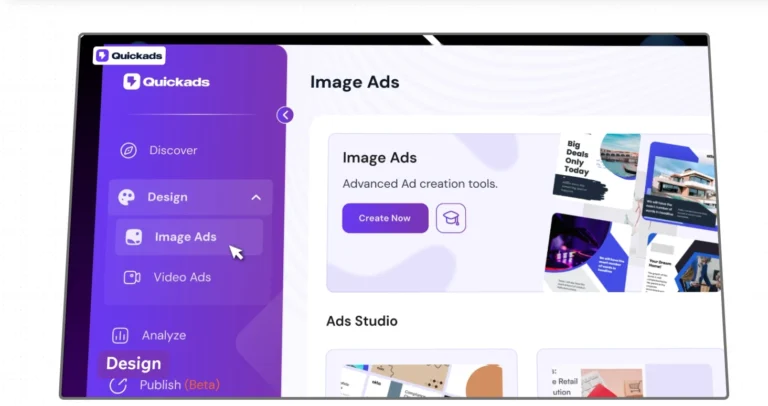

- Gemini 1.5 Pro: Enhanced models for speed and efficiency

- Project Astra: Vision for the future of AI assistants

- Gemma 2: Upcoming advances in AI models