Chain of Thought (CoT) reasoning is a technique that helps AI systems solve complex problems by breaking them down into smaller, logical steps. This approach mimics human thinking patterns and improves the accuracy of large language models when tackling difficult tasks.

It’s what makes newer AI systems seem more logical and thoughtful in their responses.

When you ask an AI a tricky question, older models might jump straight to an answer. But with chain of thought prompting, the AI shows its work, explaining each step of its reasoning process.

This makes the answers more trustworthy and helps you understand how the AI reached its conclusion.

The real power of chain of thought reasoning is that it dramatically improves AI performance on tasks requiring math, logic, and common sense. By simulating human-like reasoning processes, these systems can now solve many multi-step problems that earlier models routinely got wrong. And the best part? You don’t need to be a tech expert to take advantage of this capability in your everyday interactions with AI.

Key concepts

Chain of Thought reasoning works by creating a series of connected logical steps that lead to a final answer. This process makes problem-solving more transparent and reliable.

The technique relies on three main principles:

- Step-by-step processing: Breaking down complex problems into smaller, manageable parts

- Explicit reasoning: Showing the thinking process rather than just providing answers

- Logical connections: Creating clear links between different steps of reasoning

CoT helps AI systems handle math problems, logical puzzles, and other tasks that require deep thinking. Without this approach, models often make errors when faced with complex questions that need multiple steps to solve.

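Chain of thought prompting is often combined with few-shot learning. The prompt includes a few worked examples that show their reasoning, and the model imitates that pattern on the new problem. The original 2022 CoT experiments used this setup.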
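
A few-shot CoT prompt is just text: worked examples whose answers spell out the reasoning, followed by the new question with its answer left open. The sketch below assembles one. The `Exemplar` class and the `build_few_shot_cot_prompt` function are hypothetical helpers. The Q/A layout is one common convention, not a required format. The exemplar is modeled on the well-known tennis-ball example from early CoT research. No model is called here; you would send `prompt` to whichever LLM you use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exemplar:
    """One worked example: a question, its written-out reasoning, and the final answer."""
    question: str
    reasoning: str
    answer: str


def build_few_shot_cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Concatenate worked examples, then leave the new question's answer open for the model."""
    blocks = [
        f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
        for ex in exemplars
    ]
    blocks.append(f"Q: {question}\nA:")  # the model continues generating from here
    return "\n\n".join(blocks)


# Sample exemplar in the style of the tennis-ball example from early CoT research.
exemplars = [
    Exemplar(
        question=("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
                  "Each can has 3 tennis balls. How many tennis balls does he have now?"),
        reasoning=("Roger started with 5 balls. 2 cans of 3 tennis balls each is "
                   "6 tennis balls. 5 + 6 = 11."),
        answer="11",
    ),
]

prompt = build_few_shot_cot_prompt(
    exemplars,
    "A cafeteria had 23 apples. They used 20 to make lunch and bought 6 more. "
    "How many apples do they have?",
)
print(prompt)
```

Because the exemplar's answer contains the reasoning rather than just "11", the model tends to produce a similar chain before its own answer.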

Origins in AI research

Chain of Thought reasoning emerged from research at Google Brain and other AI labs around 2022. Scientists noticed that large language models struggled with complex reasoning tasks despite their impressive language abilities.

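The breakthrough came when researchers showed that including worked examples with intermediate reasoning steps in the prompt substantially improved performance on math and logic problems. Follow-up work found that simply adding the phrase “Let’s think step by step” to a question also improved results, without any examples at all.

These simple prompts unlocked reasoning capabilities that standard prompting had left largely untapped.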
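
The “think step by step” variant is usually called zero-shot CoT, and it is commonly run in two stages. The first prompt is the question plus the trigger phrase. The second prompt feeds back the model’s reasoning and asks for the final answer. The sketch below only builds the prompt strings. The helper names and the stage-2 wording are assumptions, and `stage1_output` stands in for whatever text the model generated in stage 1.

```python
# Stage-1 trigger phrase from zero-shot CoT research.
REASONING_TRIGGER = "Let's think step by step."
# Stage-2 phrase for extracting a final answer (this exact wording is an assumption).
ANSWER_TRIGGER = "Therefore, the answer is"


def reasoning_prompt(question: str) -> str:
    """Stage 1: ask the model to reason before it answers."""
    return f"Q: {question}\nA: {REASONING_TRIGGER}"


def answer_prompt(question: str, reasoning: str) -> str:
    """Stage 2: append the model's own reasoning and ask it to state the answer."""
    return f"{reasoning_prompt(question)} {reasoning}\n{ANSWER_TRIGGER}"


question = "A juggler has 16 balls. Half of them are golf balls. How many golf balls are there?"
print(reasoning_prompt(question))

# Suppose the model replied with this reasoning in stage 1 (hypothetical output):
stage1_output = "There are 16 balls in total. Half of 16 is 8."
print(answer_prompt(question, stage1_output))
```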

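Early experiments suggested that CoT helps because it lets the model work through a problem in explicit stages, much as a person writes out intermediate results. The gains were largest in very large models. In the original experiments, CoT helped mainly at around 100 billion parameters and above, while smaller models often produced fluent but illogical reasoning chains. Later models such as GPT-4 also benefit from the technique.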

The technique gained popularity quickly because it required no model retraining — just clever prompting strategies. This made it accessible to developers working with existing AI systems.

Distinguishing features

Chain of Thought reasoning stands out from other AI techniques in several important ways. First, it creates transparent decision trails that users can follow and verify.

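This partially addresses the “black box” problem that plagues many AI systems. However, the written steps are not guaranteed to reflect how the model actually reached its answer.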

Second, CoT enables complex reasoning capabilities that were previously difficult to achieve. Tasks like multi-step math problems become manageable when broken into logical sequences.

Third, the technique is remarkably flexible. It works across domains including:

- Mathematical calculations

- Logical deductions

- Planning scenarios

- Common sense reasoning

Unlike some AI approaches that rely on specific datasets, CoT is a prompt engineering strategy that can be applied broadly. You can implement it with various models from companies like OpenAI and Google.

The most powerful feature may be how CoT makes AI reasoning more human-like and interpretable, allowing people to better understand and trust AI conclusions.

Mechanisms of Chain of Thought in AI

Step-by-step reasoning processes

The core mechanism of Chain of Thought reasoning involves generating intermediate steps between the initial question and final answer. Unlike black-box approaches, CoT makes the AI’s thinking visible.

Here’s how it works:

- Problem identification – The AI recognizes the task requirements

- Decomposition – Breaking the problem into smaller, manageable parts

- Sequential thinking – Processing each step in logical order

- Verification – Checking intermediate conclusions for accuracy

This approach mimics how humans solve problems by working through each stage deliberately. The AI doesn’t jump directly to answers but builds reasoning pathways.

When implemented effectively, these reasoning chains create a traceable path of logic that both humans and machines can follow and understand.
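
A language model does not literally execute code like this. Still, the structure of a good reasoning chain can be mimicked with a small data structure. In the sketch below, the `Step` and `ReasoningChain` classes are hypothetical and written only for illustration. Each of the four stages maps onto one part of a worked problem, so the printed trace is the kind of auditable path described above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    description: str  # plain-language statement of what this step does
    value: int        # intermediate result the step produces


@dataclass
class ReasoningChain:
    question: str
    steps: List[Step] = field(default_factory=list)

    def add(self, description: str, value: int) -> int:
        """Record an intermediate conclusion and return it so later steps can use it."""
        self.steps.append(Step(description, value))
        return value

    def trace(self) -> str:
        """Render the chain as numbered lines that a reader can audit."""
        return "\n".join(
            f"{i}. {step.description} -> {step.value}"
            for i, step in enumerate(self.steps, start=1)
        )


# Problem identification: the task asks for a total count of tennis balls.
chain = ReasoningChain("Roger has 5 balls and buys 2 cans of 3 balls. How many does he have?")

# Decomposition and sequential thinking: each sub-result feeds the next step.
start = chain.add("Balls Roger starts with", 5)
bought = chain.add("Balls in 2 cans of 3", 2 * 3)
total = chain.add("Starting balls plus bought balls", start + bought)

# Verification: check the final result by working backwards to the starting value.
assert total - bought == start, "intermediate steps are inconsistent"

print(chain.trace())
```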

Prompt design and engineering

Creating effective CoT systems requires careful prompt engineering. The way you phrase questions significantly impacts the quality of the reasoning process.

Structured reasoning approaches in CoT prompting often include:

- Explicit instructions to “think step by step”

- Few-shot examples demonstrating the desired reasoning pattern

- Question decomposition techniques that break problems into parts

- Self-reflection prompts that encourage the AI to evaluate its own reasoning

The most effective prompts provide just enough guidance without over-constraining the AI’s thinking processes. They create a framework that allows flexible reasoning while maintaining logical coherence.
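
The elements listed above can be combined in a single template. This is a minimal sketch that assumes you supply the sub-questions yourself. The function name `structured_cot_prompt`, the instruction wording, and the trailing “Answer:” line are illustrative choices, not a standard format.

```python
from typing import List


def structured_cot_prompt(question: str, subquestions: List[str], reflect: bool = True) -> str:
    """Build a CoT prompt from an explicit instruction, optional decomposition, and self-reflection."""
    parts = [
        # Explicit instruction to reason step by step.
        "Solve the problem below. Think step by step and show your work.",
        f"Problem: {question}",
    ]
    if subquestions:
        # Question decomposition: the model answers these in order.
        parts.append("Work through these sub-questions in order:")
        parts.extend(f"{i}. {sq}" for i, sq in enumerate(subquestions, start=1))
    if reflect:
        # Self-reflection: ask the model to audit its own chain before answering.
        parts.append("Before giving a final answer, re-check each step and correct any mistakes.")
    # A fixed final line makes the answer easy to extract later.
    parts.append("End with a line of the form 'Answer: <answer>'.")
    return "\n".join(parts)


p = structured_cot_prompt(
    "A train travels 120 km in 2 hours, then 60 km in 1 hour. What is its average speed?",
    ["What is the total distance?", "What is the total time?", "What is distance divided by time?"],
)
print(p)
```

Keeping the guidance to a few short lines reflects the point above. The template sets up a structure without scripting every step of the model’s reasoning.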

Engineers constantly refine these prompting strategies to improve performance on different types of problems, from mathematical reasoning to ethical decision-making.

Role in complex problem solving

CoT excels at tasks requiring multi-step reasoning, where models asked for an immediate answer often fail. Spelling out intermediate steps lets the model handle one piece of the problem at a time.

Language models using CoT can tackle problems in various domains:

- Mathematical reasoning – Breaking down equations step-by-step

- Logical puzzles – Working through scenarios with conditional logic

- Planning tasks – Developing sequences of actions to achieve goals

- Content creation – Structuring arguments with supporting evidence

The transparency of CoT often lets you pinpoint where the reasoning goes wrong. This makes debugging easier and builds trust in the system’s outputs.
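
Because the steps are written out, some errors can even be caught mechanically. The sketch below is a hypothetical helper, not part of any library. It scans a chain of thought for simple integer claims such as `5 + 6 = 12` and reports the lines where the stated result is wrong. It only checks arithmetic written in that exact form and cannot detect flawed logic.

```python
import re
from typing import List, Tuple

# Matches simple claims like "5 + 6 = 11" or "2 * 3 = 6" (integers only, an assumption of this sketch).
CLAIM = re.compile(r"(-?\d+)\s*([+\-*/])\s*(-?\d+)\s*=\s*(-?\d+)")

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b if b else None,  # division by zero never matches a stated result
}


def find_arithmetic_errors(chain_text: str) -> List[Tuple[int, str]]:
    """Return (line number, line) for each reasoning step whose stated arithmetic is wrong."""
    errors = []
    for lineno, line in enumerate(chain_text.splitlines(), start=1):
        for a, op, b, c in CLAIM.findall(line):
            if OPS[op](int(a), int(b)) != int(c):
                errors.append((lineno, line.strip()))
                break  # one report per line is enough
    return errors


trace = """Roger starts with 5 balls.
He buys 2 cans of 3 balls: 2 * 3 = 6.
In total he has 5 + 6 = 12."""
print(find_arithmetic_errors(trace))
```

Here the checker flags line 3. In a direct answer of “12”, the same slip would be invisible.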

For enterprise applications, CoT can help supply the reasoning audit trails often required in regulated industries or high-stakes decision contexts.

Comparison to traditional approaches

Traditional AI approaches, including asking a language model for a direct answer, often function as “black boxes” that produce answers without explaining their reasoning. CoT shifts this by making the intermediate steps explicit.

Key differences include:

| Traditional AI Approaches | Chain of Thought Approaches |
|---|---|
| Direct input-to-output mapping | Sequential reasoning steps |
| Limited transparency | Visible thinking process |
| Struggles with complexity | Handles multi-step problems |
| Difficult to debug | Easier to identify reasoning errors |

These reasoning capabilities often make CoT approaches considerably more effective on tasks requiring logical thinking. You can follow the AI’s logic, identify flaws, and provide targeted corrections.

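CoT can also help with the “hallucination” problem common in AI. When a model shows its work rather than simply asserting a conclusion, unsupported claims and faulty steps are easier to spot and correct. It does not eliminate hallucinations, because the reasoning steps themselves can contain invented facts.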

Applications and limitations of chain of thought reasoning

Chain of thought (CoT) reasoning has transformed how AI systems tackle complex problems.

This approach allows models to break down challenging tasks into manageable steps, improving performance across various domains while still facing important constraints.

Use cases in natural language processing

In natural language processing, chain of thought prompting enhances AI capabilities in several key areas:

Question answering has improved dramatically with CoT. When asked complex questions, AI can now show its reasoning process, making answers more transparent and accurate. This helps users understand how the AI reached its conclusions.

Text summarization benefits from step-by-step reasoning. Instead of producing generic summaries, CoT-enabled models identify key points, analyze relationships between concepts, and create more insightful summaries.

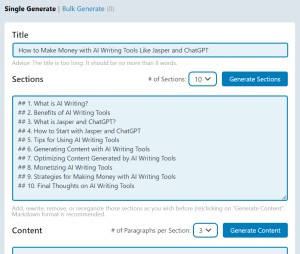

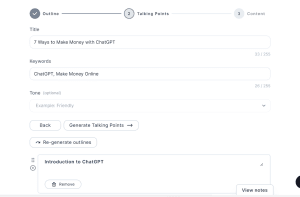

Content creation has become more structured with CoT techniques. Writers and marketers use these systems to develop coherent arguments, plan article structures, and generate creative content with logical flow.

CoT can also help with translation by having the model reason explicitly about idiomatic expressions and cultural references that a literal translation might miss. This can produce more natural-sounding results in the target language.

Performance in mathematical reasoning

Chain of thought prompting represents a significant advancement in AI’s mathematical capabilities:

Problem-solving accuracy: By walking through calculations step-by-step, models reduce computational errors and demonstrate clearer reasoning paths. This makes AI more reliable for complex math tasks.

Multi-step calculations: Problems that once confused AI systems are now handled with greater precision. Models can track variables, apply formulas correctly, and maintain logical consistency throughout lengthy problems.

Word problems: AI can translate narrative scenarios into mathematical operations by identifying relevant variables and relationships hidden in text descriptions.

Geometry and spatial reasoning: Models can break geometry problems into logical components, which helps with tasks ranging from basic shape calculations to multi-step proofs.
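
To score CoT output on math problems, you usually need to separate the final answer from the reasoning. A common convention is to ask the model to finish with “The answer is N.” The sketch below assumes that convention and falls back to the last number in the text. The helper name and the regular expressions are assumptions. Real outputs containing fractions, units, or LaTeX would need more robust parsing.

```python
import re
from typing import Optional

# A number such as 11, -3, 1,500, or 2.75 (commas treated as thousands separators).
NUMBER = r"-?\d+(?:,\d{3})*(?:\.\d+)?"


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the final numeric answer from a CoT completion, or None if there is none."""
    # Preferred: an explicit "the answer is N" phrase (assumed output convention).
    match = re.search(rf"answer is\s*({NUMBER})", completion, flags=re.IGNORECASE)
    if match:
        return match.group(1).replace(",", "")
    # Fallback: the last number mentioned anywhere in the reasoning.
    numbers = re.findall(NUMBER, completion)
    return numbers[-1].replace(",", "") if numbers else None


print(extract_final_answer("2 cans of 3 is 6. 5 + 6 = 11. The answer is 11."))
print(extract_final_answer("Total cost: 1,250 + 250 = 1,500"))
```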

Chain of thought challenges and constraints

Despite its benefits, chain of thought reasoning faces significant limitations:

Computational demands present a major obstacle. CoT makes the model generate many more tokens than a direct answer would. This increases latency and cost per query, which adds up quickly in large-scale or complex applications.

Reliability concerns persist across different problem types. While CoT improves performance overall, it doesn’t guarantee correct results in every case. Models can still make logical errors or follow incorrect reasoning paths.

Scaling challenges emerge with extremely complex problems. As the number of reasoning steps increases, the likelihood of errors compounds, potentially leading to incorrect conclusions.
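
The compounding effect can be made concrete with a simple model. Assume, purely for illustration, that each step in a chain is correct with the same probability p and that steps fail independently. A chain of n steps is then fully correct with probability p^n. Real errors are not independent, and a slip does not always change the final answer, so treat this as a rough intuition rather than a prediction. The function name is hypothetical.

```python
def chain_success_probability(p_step: float, n_steps: int) -> float:
    """Probability that all n steps are correct, assuming each step independently
    succeeds with probability p_step (a simplifying assumption)."""
    if not 0.0 <= p_step <= 1.0:
        raise ValueError("p_step must be between 0 and 1")
    return p_step ** n_steps


for n in (1, 5, 10, 20):
    print(f"{n:>2} steps at 95% per step -> {chain_success_probability(0.95, n):.1%}")
```

Under these assumptions, 95% per-step accuracy gives only about a 60% chance that a 10-step chain is entirely correct, and about 36% for 20 steps.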

Domain limitations restrict effectiveness in specialized fields. CoT works best in areas where problems have clear, logical structures and less effectively in domains requiring intuition or specialized knowledge not captured in training data.

Implementation complexity adds another barrier. Creating effective CoT prompts requires skill and experimentation to guide models toward productive reasoning paths rather than irrelevant tangents.

CoT FAQs

How does the chain-of-thought approach enhance reasoning in large language models?

Chain-of-thought (CoT) prompting significantly improves reasoning in large language models by encouraging step-by-step thinking. Instead of jumping straight to answers, models work through problems gradually.

This approach mirrors human reasoning, creating a series of logical deductions that lead to more accurate solutions. The intermediate steps make the reasoning process transparent and easier to follow.

When combined with few-shot prompting, CoT becomes even more powerful. Given several examples of step-by-step reasoning in the prompt, the model applies similar thinking patterns to new problems.

What are some practical applications of chain-of-thought prompting in AI systems?

CoT prompting excels in mathematical problem-solving where breaking down equations helps reach accurate answers. It’s particularly useful for word problems that require multiple calculations.

In logical reasoning tasks, CoT helps AI navigate complex scenarios by considering cause and effect relationships. This is valuable for planning, decision-making, and analyzing hypothetical situations.

CoT also improves performance on tasks requiring deep understanding, such as scientific analysis, legal reasoning, and medical diagnostics. By showing its work, the AI produces conclusions that experts can check, which makes them easier to trust.

Can you explain how chain-of-thought reasoning compares to other types of reasoning in AI?

Unlike direct prompting, which seeks immediate answers, CoT breaks problems into manageable steps. This methodical approach reduces errors in complex scenarios that require multi-step solutions.

CoT differs from retrieval-based reasoning, which primarily relies on finding and applying stored information. CoT instead works through a problem step by step, which can help in scenarios where no stored answer applies.

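Some researchers argue that CoT outputs can be after-the-fact rationalizations. In this view, the model may effectively settle on an answer first and then construct a plausible reasoning path. Whether a stated chain of thought faithfully reflects the model’s actual computation remains a topic of ongoing research and debate.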

What role does chain-of-thought prompting play in enhancing the abilities of AI models like ChatGPT?

CoT prompting helps ChatGPT tackle complex reasoning tasks that would otherwise exceed its capabilities. By encouraging step-by-step thinking, it reduces errors in multi-stage problems.

This technique makes ChatGPT’s decision-making process more transparent. Users can follow the reasoning path and identify exactly where mistakes might occur, leading to better collaborative problem-solving.

ChatGPT can also follow worked CoT examples placed in the prompt and imitate their structure on new problems. This in-context learning does not change the model’s weights. Even so, this structured reasoning approach helps it handle complex tasks that require deep analytical thinking.

How is the chain-of-thought concept applied in the development of AI tools such as DeepSeek and LangChain?

DeepSeek’s reasoning models, such as DeepSeek-R1, are trained to generate an extended chain of thought before giving a final answer. By breaking complex tasks into smaller steps, they achieve greater accuracy and produce more reliable outputs.
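
When you run a reasoning model yourself, you often need to separate its chain of thought from its answer. Open-weight DeepSeek-R1 models typically emit their reasoning between `<think>` and `</think>` tags before the answer. Hosted APIs may instead return the reasoning in a separate field, so check your provider’s documentation. The sketch below assumes the tag format. `split_reasoning` is a hypothetical helper, and the sample output is invented for illustration.

```python
import re
from typing import Tuple

# Assumes reasoning is wrapped in <think>...</think> tags; DOTALL lets "." span newlines.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> Tuple[str, str]:
    """Separate the chain of thought from the final answer in a raw model output."""
    match = THINK_BLOCK.search(raw)
    if match is None:
        return "", raw.strip()  # no visible reasoning block
    reasoning = match.group(1).strip()
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return reasoning, answer


raw_output = "<think>\n5 balls plus 2 cans of 3 is 5 + 6 = 11.\n</think>\n\nRoger has 11 tennis balls."
reasoning, answer = split_reasoning(raw_output)
print(reasoning)
print(answer)
```

Keeping the two parts separate lets you log the reasoning for debugging while showing users only the answer.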

LangChain is a framework for composing multiple LLM calls and tools into pipelines it calls “chains,” and CoT prompts fit naturally into these pipelines. This allows for multi-step reasoning processes that can handle complex workflows and decision trees.
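
LangChain provides its own abstractions for composing calls. Rather than guess at their exact API, the sketch below uses plain Python to show the underlying idea: a sequence of prompts in which each step receives the previous step’s output, with one step explicitly asking for step-by-step reasoning. `run_chain` and `echo_model` are hypothetical names. `echo_model` is a toy stand-in that you would replace with a real LLM call.

```python
from typing import Callable, List

# A "model" is any function from prompt text to completion text.
Model = Callable[[str], str]


def run_chain(model: Model, question: str, templates: List[str]) -> List[str]:
    """Run prompt templates in order; each may use {question} and {previous} placeholders."""
    outputs: List[str] = []
    previous = ""
    for template in templates:
        prompt = template.format(question=question, previous=previous)
        previous = model(prompt)  # this step's output feeds the next template
        outputs.append(previous)
    return outputs


def echo_model(prompt: str) -> str:
    """Toy stand-in for an LLM: reports the first line of the prompt it received."""
    return f"[completion for: {prompt.splitlines()[0]}]"


steps = run_chain(
    echo_model,
    "Should we migrate the database this weekend?",
    [
        "List the facts relevant to: {question}",
        "Using these facts, reason step by step about the risks:\n{previous}",
        "Given this reasoning, give a one-line recommendation:\n{previous}",
    ],
)
for step in steps:
    print(step)
```

Because every intermediate output is kept in `steps`, you can inspect exactly which stage of the chain went wrong.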

Both tools use CoT to make AI reasoning more transparent and debuggable. Developers can examine the intermediate steps to identify weaknesses and improve model performance over time.

In what ways does the absence of prompting affect chain-of-thought reasoning in AI?

Without explicit CoT prompting, many models, especially older or smaller ones, produce direct answers without showing their work. This reduces transparency and makes it difficult to verify the reasoning process.

The lack of CoT prompting particularly affects performance on complex problems. Models are more likely to make logical errors or jump to incorrect conclusions when not encouraged to think step by step.

Training AI models to use CoT reasoning automatically, even without explicit prompts, is an important frontier. Reasoning models such as OpenAI’s o1 and DeepSeek-R1 are trained to produce chains of thought on their own. Making that reasoning reliable, faithful, and human-like remains a significant challenge.