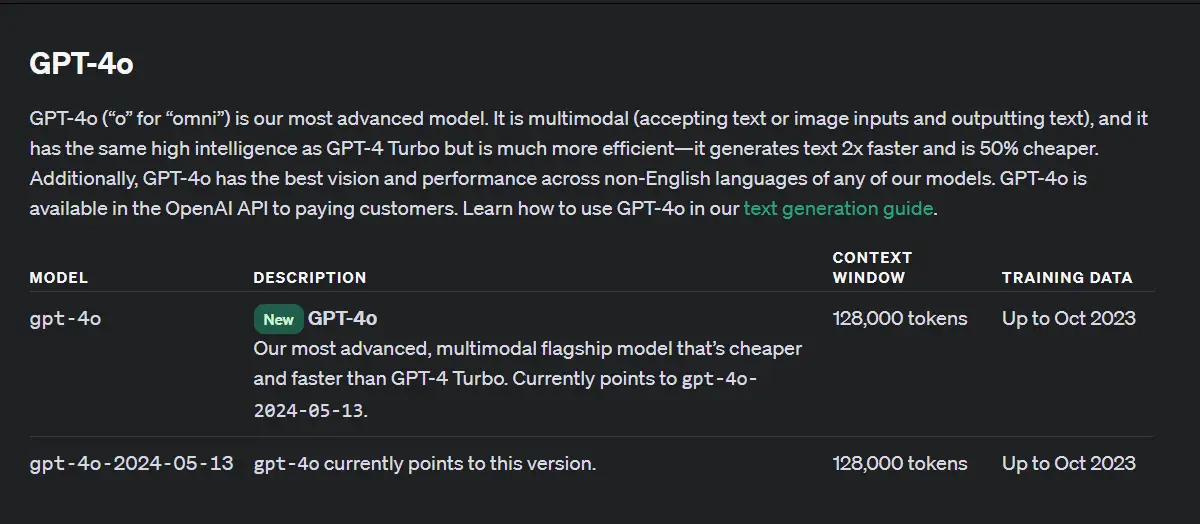

Wondering how to use GPT-4o? If you are familiar with OpenAI’s products, this shouldn’t be an issue.

GPT-4o, launched by OpenAI on May 13, 2024, is an advanced AI model designed for faster responses and more natural-sounding voice capabilities.

When GPT-4o is fully rolled out, you will be able to access it via:

- The ChatGPT interface

- OpenAI API

Here’s a detailed guide on how to utilize GPT-4o effectively:

Availability in the API

OpenAI’s GPT-4o is available to users with an API account. It can be integrated into applications through several APIs, including Chat Completions, Assistants, and Batch.

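GPT-4o also supports function calling, which lets the model ask your code to run functions you describe, and JSON mode, which makes it return valid JSON. Together these make it practical to build structured, tool-using features into websites and apps.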

- Access after payment: Once you have paid at least $5 toward API usage, you can use GPT-4o as well as GPT-4 and GPT-4 Turbo.

- API Models: GPT-4o joins a suite of models offering diverse capabilities for developers.

- Start: Developers can experiment with GPT-4o via the Playground.

- Pricing: The cost of using GPT-4o is detailed on the API pricing page.
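Because cost scales with the number of input and output tokens, a rough per-request estimate is simple arithmetic. This sketch assumes prices are quoted per one million tokens and takes them as arguments; fill them in from the pricing page, since no real prices are hard-coded here. Token counts come from the `usage` field of a Chat Completions response (`prompt_tokens`, `completion_tokens`); the helper names are illustrative.

```python
def estimate_cost(prompt_tokens, completion_tokens, input_price_per_m, output_price_per_m):
    """Dollar cost of one request, given prices per one million input/output tokens."""
    return (prompt_tokens * input_price_per_m
            + completion_tokens * output_price_per_m) / 1_000_000


def cost_of_response(response, input_price_per_m, output_price_per_m):
    """Estimate the cost of a Chat Completions response from its `usage` token counts."""
    usage = response.usage
    return estimate_cost(usage.prompt_tokens, usage.completion_tokens,
                         input_price_per_m, output_price_per_m)
```

For example, `estimate_cost(1_000, 500, 1.0, 2.0)` returns 0.002, that is, $0.002 at made-up prices of $1 and $2 per million tokens.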

Via API

Setting up your environment

Installing necessary libraries:

- Python: Install the OpenAI Python library if you haven’t already. Run the following command in your terminal:

```shell
pip install openai
```

- API Configuration: Set your API key in your script to authenticate requests:

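```python
import openai

# Authenticate requests. If you omit this line, the SDK reads the
# OPENAI_API_KEY environment variable instead (safer than hard-coding the key).
openai.api_key = "your-api-key"
```

Using GPT-4o for text and voice inputs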

Generating text:

- Prompt Design: Craft detailed and specific prompts to get the best responses from GPT-4o.

- API Call for Text:

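```python
# GPT-4o is a chat model, so it is called through the Chat Completions API,
# not the legacy Completions API (openai.Completion was removed in openai>=1.0)
response = openai.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "user", "content": "Write a detailed project proposal for an AI startup."},
    ],
    max_tokens=500,
)

print(response.choices[0].message.content)
```

Voice capabilities: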

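- Setting Up Voice Interaction: Depending on the features available to your account, a straightforward way to produce spoken output through the API is to generate text with GPT-4o and pass it to the separate text-to-speech endpoint. This example assumes the `tts-1` model and the `alloy` voice, and saves the audio to an MP3 file rather than relying on a playback library:

```python
# Step 1: generate the text to be spoken with GPT-4o
response = openai.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Write a short, friendly greeting for a voice assistant."}],
    max_tokens=500,
)
text = response.choices[0].message.content

# Step 2: convert the text to natural-sounding speech with the text-to-speech endpoint
speech = openai.audio.speech.create(model="tts-1", voice="alloy", input=text)
speech.write_to_file("greeting.mp3")  # play this file with any audio player
```

Utilizing multimodal features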

Image and text integration:

- Multimodal Inputs: GPT-4o can process and generate responses based on both text and images. For instance, providing an image alongside a text prompt can yield a more contextual response:

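```python
import base64

# Images are sent as message content parts; a local file is encoded as a base64 data URL
with open("path_to_image.jpg", "rb") as f:
    image_b64 = base64.b64encode(f.read()).decode("utf-8")

response = openai.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": [
        {"type": "text", "text": "Describe the contents of the attached image."},
        {"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{image_b64}"}},
    ]}],
)

print(response.choices[0].message.content)
```

Optimizing API usage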

Best practices:

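- Rate Limiting: Your account is limited in how many requests and tokens it can use per minute, and calls beyond those limits are throttled with HTTP 429 errors. Stay within them by batching requests when possible and by retrying throttled calls after a delay.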

- Fine-Tuning: Where fine-tuning is offered for GPT-4o, you can use it to tailor the model’s responses to your specific needs. This involves training the model on a custom dataset of example conversations to improve its performance in your domain.
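The rate-limiting advice can be sketched two ways, assuming the v1.x `openai` Python SDK. `with_backoff` retries a throttled call with exponentially growing, jittered delays; the exception types to retry on are a parameter (with the real SDK you would pass `openai.RateLimitError`). `build_batch_file` and `submit_batch` package many requests into one Batch API job, where each JSONL line is a request to `/v1/chat/completions` tagged with a `custom_id` you choose. All three helper names and the file name `batch_input.jsonl` are illustrative, not part of the SDK.

```python
import json
import random
import time
from pathlib import Path


def with_backoff(fn, retry_on, max_attempts=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(); on an exception listed in retry_on, wait and retry with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: re-raise the last error
            delay = min(max_delay, base_delay * 2 ** attempt)  # 1s, 2s, 4s, ... (capped) by default
            sleep(delay + random.uniform(0, delay / 2))  # jitter spreads out concurrent retries


def build_batch_file(prompts, path="batch_input.jsonl", model="gpt-4o", max_tokens=200):
    """Write one Chat Completions request per line, in the Batch API's JSONL input format."""
    out = Path(path)
    with out.open("w", encoding="utf-8") as f:
        for i, prompt in enumerate(prompts):
            request = {
                "custom_id": f"request-{i}",  # your own ID for matching results to inputs
                "method": "POST",
                "url": "/v1/chat/completions",
                "body": {
                    "model": model,
                    "messages": [{"role": "user", "content": prompt}],
                    "max_tokens": max_tokens,
                },
            }
            f.write(json.dumps(request) + "\n")
    return out


def submit_batch(client, path):
    """Upload the JSONL file and start a batch job; client is an openai.OpenAI() instance."""
    with open(path, "rb") as f:
        batch_file = client.files.create(file=f, purpose="batch")
    return client.batches.create(
        input_file_id=batch_file.id,
        endpoint="/v1/chat/completions",
        completion_window="24h",
    )
```

With a real client, a single call might be wrapped as `with_backoff(lambda: client.chat.completions.create(...), retry_on=(openai.RateLimitError,))`, and a batch job started with `batch = submit_batch(client, build_batch_file(["First prompt", "Second prompt"]))` and polled with `client.batches.retrieve(batch.id)`. The v1.x client also retries some failed requests on its own (see its `max_retries` option), so a manual loop like this mainly matters for longer waits or custom retry policies.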

Documentation and Resources:

- Official Docs: Refer to OpenAI’s documentation for comprehensive guides and examples.

- Community Forums: Engage with the developer community through forums and discussions to share insights and solutions.

By following these steps, you can effectively harness the power of GPT-4o for various applications, from generating text to implementing advanced voice interactions and multimodal processing.