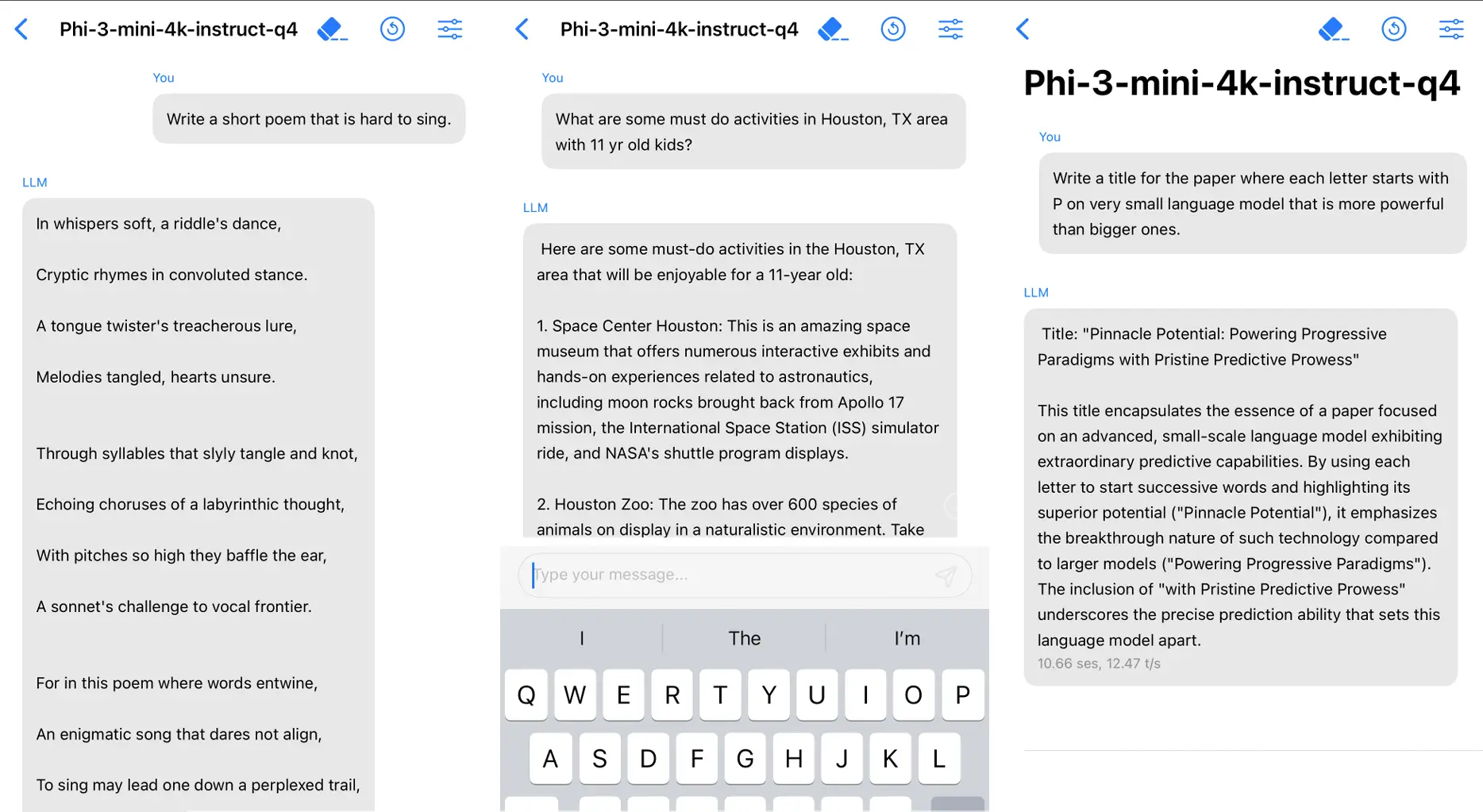

Microsoft has unveiled a breakthrough in machine learning technology: the Phi-3 family of small language models (SLMs).

Unlike their larger counterparts, these streamlined SLMs perform well across a range of tasks without requiring heavyweight infrastructure.

On a range of benchmarks they match, and in some cases exceed, the performance of larger models, while being trained on a smaller, carefully curated dataset. This reflects a shift toward efficient but capable AI tools.

As these models become publicly available, users can run them directly on devices such as smartphones, gaining convenience and fast responses without a round trip to the cloud.

The first model released, Phi-3-mini, delivers strong performance for its compact size and is available through Microsoft Azure AI Studio, Hugging Face, and Ollama.

This brings AI within reach in places with limited connectivity or strict data-privacy requirements, opening up new applications across diverse and challenging environments.

The significance of superior training data

In artificial intelligence (AI), and in language models in particular, a model's strength and efficiency often hinge on the quality of its training data.

Smaller language models such as Phi-3-mini, with “only” 3.8 billion parameters, demonstrate that expansive capabilities aren’t solely a function of size.

These models perform well despite their smaller size, and much of their success comes from the careful selection and use of high-quality training material.

In generative AI, size was traditionally equated with power. Large models were trained on vast volumes of internet data, which helped them learn the intricacies of language and produce intelligent responses. The Phi-3 work took a different path, emphasizing the quality of data rather than its quantity.

The roots of this approach lie in an earlier experiment: starting from a list of about 3,000 carefully chosen words, researchers prompted a large language model to write millions of short children’s stories.

A compact language model trained on the resulting dataset, called “TinyStories,” went on to write surprisingly coherent and grammatically correct stories.
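The generation recipe above can be sketched as a simple prompt builder. This is a minimal illustration under stated assumptions: that each prompt asks for a story containing one randomly drawn noun, verb, and adjective, and that the tiny word lists and prompt wording shown here are hypothetical stand-ins for the real 3,000-word vocabulary. The sketch only builds prompts; sending them to a large language model is left out.

```python
import random
from typing import Dict, List

# Hypothetical miniature vocabulary; the real list had roughly 3,000 words.
VOCAB: Dict[str, List[str]] = {
    "noun": ["dog", "ball", "tree", "cake"],
    "verb": ["jump", "share", "find", "build"],
    "adjective": ["happy", "tiny", "shiny", "brave"],
}


def make_story_prompt(rng: random.Random, vocab: Dict[str, List[str]] = VOCAB) -> str:
    """Build one prompt that forces a random combination of simple words."""
    noun = rng.choice(vocab["noun"])
    verb = rng.choice(vocab["verb"])
    adjective = rng.choice(vocab["adjective"])
    return (
        "Write a short story for a young child using simple words. "
        f"The story must use the noun '{noun}', the verb '{verb}', "
        f"and the adjective '{adjective}'."
    )


def make_prompts(n: int, seed: int = 0) -> List[str]:
    """Generate n reproducible prompts; each would be sent to a large LLM (not shown)."""
    rng = random.Random(seed)
    return [make_story_prompt(rng) for _ in range(n)]


if __name__ == "__main__":
    for prompt in make_prompts(3):
        print(prompt)
```

Because each prompt forces a different word combination, repeating it millions of times yields varied stories while keeping the vocabulary simple enough for a small model to learn.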

The researchers later expanded and refined this process to train Phi-1, an earlier model in the Phi family, using a more elaborate method to gather data of high educational value.

To build this “CodeTextbook” dataset, the team applied a rigorous filtering strategy, keeping only high-quality, educational sources akin to textbook material. An AI model was then tasked with understanding and rewriting that material, which simplified the smaller model’s learning process.

Separating high-quality content from the immense pool of available information is not easy. Large language models (LLMs) help here, making it practical to evaluate and curate the large amounts of data needed to train smaller, more focused models. LLMs also generate synthetic training data, a process that is now integral to developing capable AI applications.
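The curation step can be sketched as score-then-filter. In the pipeline described above an LLM judges each document's quality; in this sketch a crude keyword heuristic (`heuristic_score`, hypothetical) stands in for that judge so the code runs offline, and the 0.5 threshold is an arbitrary illustrative choice.

```python
from typing import Callable, List

# Hypothetical markers of "textbook-like" content; a real pipeline would ask an LLM instead.
EDUCATIONAL_MARKERS = ("for example", "definition", "step", "because", "def ", "returns")


def heuristic_score(text: str) -> float:
    """Stand-in for an LLM judge: fraction of markers found (substring match), 0.0 to 1.0."""
    lowered = text.lower()
    hits = sum(1 for marker in EDUCATIONAL_MARKERS if marker in lowered)
    return hits / len(EDUCATIONAL_MARKERS)


def filter_corpus(
    docs: List[str], score: Callable[[str], float], threshold: float = 0.5
) -> List[str]:
    """Keep only documents whose quality score meets the threshold."""
    return [doc for doc in docs if score(doc) >= threshold]
```

Swapping `heuristic_score` for a function that asks an LLM to rate each document leaves `filter_corpus` unchanged, which is why the scorer is passed in as a parameter.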

Good data is not the whole story, however; safety and ethical considerations matter just as much. Phi-3 models go through rigorous testing, red-teaming, and feedback loops so that their responses are not just smart but also safe and appropriate. This layered approach helps establish trust in the applications built on these models.

Developers building with Phi-3 can also use tools in Azure AI that help them create applications meeting high standards of trustworthiness and safety.

Selecting appropriate language models for specific tasks

When determining the best language model to employ for a specific task, it’s essential to understand the capabilities and limitations of both small and large language models.

Selecting an appropriately sized model can significantly impact the efficiency and outcome of your project.

- Small Language Models (SLMs): Ideal for tasks that require concise answers or content generation, such as:

  - Summarizing key points from lengthy documents

  - Gleaning trends and insights from industry reports

  - Crafting marketing material and social media content

  - Operating customer support chatbots for straightforward inquiries

SLMs bring the advantage of lower computational overhead and can often provide swift responses without the need to access cloud-based computing resources.

- Large Language Models (LLMs): More suitable for complex tasks that involve:

  - In-depth analysis of extensive datasets

  - Complex reasoning and planning across multiple variables

  - Sifting through vast scientific literature for drug discovery or similar applications

  - Partitioning intricate tasks into manageable subtasks for problem-solving

Though larger language models require more processing power, they excel at handling requests that demand a deeper understanding and sophisticated cognitive processing.

Efficient deployment strategies:

- Utilize SLMs for simpler, less demanding tasks to reduce the load on computing resources and achieve faster turnaround times.

- Employ LLMs to handle complex queries and as “intelligent routers” that decide which model should answer each request.

- Consider leveraging specialized models for operations on the edge or directly on devices, where connectivity to cloud services is not necessary.
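The routing strategy above can be sketched as a small dispatcher. In practice an LLM (or a trained classifier) would decide where each request goes; to keep this sketch self-contained, a hypothetical length-and-keyword heuristic (`looks_complex`) stands in for that decision, and both model backends are placeholder functions rather than real API calls.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical cues suggesting a request needs multi-step reasoning.
COMPLEX_CUES = ("analyze", "plan", "compare", "step by step", "why")


def looks_complex(query: str, max_simple_words: int = 30) -> bool:
    """Heuristic stand-in for a router model: long queries or cue substrings count as complex."""
    lowered = query.lower()
    return len(query.split()) > max_simple_words or any(cue in lowered for cue in COMPLEX_CUES)


@dataclass
class Router:
    small_model: Callable[[str], str]  # placeholder for a local SLM such as Phi-3-mini
    large_model: Callable[[str], str]  # placeholder for a cloud-hosted LLM

    def route(self, query: str) -> str:
        """Send complex queries to the large model and everything else to the small one."""
        model = self.large_model if looks_complex(query) else self.small_model
        return model(query)


# Placeholder backends that only tag which model "answered".
router = Router(small_model=lambda q: f"[SLM] {q}", large_model=lambda q: f"[LLM] {q}")
```

Keeping the routing decision in one function makes it easy to replace the heuristic with a real model call later without touching the dispatch logic.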

Understanding the trade-offs:

- Computational Cost vs. Depth of Intelligence: While SLMs are less resource-intensive, LLMs bring a higher level of intelligence that currently remains unmatched by their smaller counterparts.

- Scalability and Accessibility: SLMs can run locally, which helps in environments where cloud access is limited or latency is a concern.

- Ongoing Evolution: The gap between SLM and LLM capabilities may persist as both continue to advance, with larger models potentially maintaining their lead in complex cognitive tasks.

Practical applications:

By matching each model type’s strengths to your project’s demands, you can build an approach that uses computational resources efficiently and meets your objectives. Whether you need a lightweight solution for content generation or a robust system for complex reasoning, your choice of language model plays a pivotal role in your project’s success.


Common queries about Phi-3 language models

Accessing Phi-3 on Hugging Face

To start using Phi-3 on Hugging Face, search the Hugging Face Hub for “Phi-3”. Microsoft publishes the models under its microsoft organization (for example, microsoft/Phi-3-mini-4k-instruct), and each model card describes the model’s features and explains how to run it.
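A minimal sketch of running Phi-3-mini locally with the Hugging Face `transformers` library. It assumes a recent `transformers` release with built-in Phi-3 support, the `microsoft/Phi-3-mini-4k-instruct` model ID, and enough memory for the weights; `build_messages`, `load_pipeline`, and `generate` are illustrative helper names, not part of any official API.

```python
from functools import lru_cache
from typing import Dict, List

# One of the Phi-3-mini checkpoints published by Microsoft on the Hugging Face Hub.
MODEL_ID = "microsoft/Phi-3-mini-4k-instruct"


def build_messages(user_prompt: str) -> List[Dict[str, str]]:
    """Wrap a prompt in the chat-message format that instruction-tuned models expect."""
    return [{"role": "user", "content": user_prompt}]


@lru_cache(maxsize=1)
def load_pipeline():
    """Load the model once and reuse it; weights are downloaded on the first call."""
    # Imported lazily so this module can be imported without transformers installed.
    from transformers import pipeline

    return pipeline("text-generation", model=MODEL_ID, torch_dtype="auto")


def generate(user_prompt: str, max_new_tokens: int = 200) -> str:
    """Run one chat turn locally and return only the model's reply."""
    outputs = load_pipeline()(
        build_messages(user_prompt),
        max_new_tokens=max_new_tokens,
        return_full_text=False,  # drop the prompt from the returned text
        do_sample=False,  # greedy decoding for repeatable output
    )
    return outputs[0]["generated_text"]
```

For example, `generate("Summarize the benefits of small language models in two sentences.")` downloads several gigabytes of weights on first use and afterwards runs on the local machine. Caching the pipeline keeps repeated calls from reloading the model.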

Differences Between Mini, Small, and Medium Phi-3 Models

- Phi-3 Mini: About 3.8 billion parameters; streamlined for basic tasks and compact enough to run on devices such as phones.

- Phi-3 Small: About 7 billion parameters; more capable, balancing size and performance.

- Phi-3 Medium: About 14 billion parameters; the most capable of the three, suited to more complex applications.
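A back-of-the-envelope calculation shows why the smallest variant suits phones. This sketch assumes approximate sizes of 3.8B (mini), 7B (small), and 14B (medium) parameters and counts the weights only, ignoring activations, the KV cache, and runtime overhead.

```python
from typing import Dict

# Approximate parameter counts, in billions, for the Phi-3 variants.
PARAMS_BILLIONS: Dict[str, float] = {"mini": 3.8, "small": 7.0, "medium": 14.0}

# Storage cost per parameter at common numeric precisions.
BYTES_PER_PARAM: Dict[str, float] = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(variant: str, precision: str) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = PARAMS_BILLIONS[variant] * 1e9 * BYTES_PER_PARAM[precision]
    return round(total_bytes / 1e9, 2)


if __name__ == "__main__":
    for variant in PARAMS_BILLIONS:
        sizes = ", ".join(f"{p}: {weight_memory_gb(variant, p)} GB" for p in BYTES_PER_PARAM)
        print(f"Phi-3-{variant}: {sizes}")
```

Under these assumptions, Phi-3-mini’s weights shrink from about 7.6 GB in fp16 to about 1.9 GB at 4-bit precision, small enough to be plausible on a recent smartphone, whereas Phi-3-medium needs about 28 GB in fp16.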

Locating Phi-3 model source code

Microsoft distributes Phi-3 openly: the model weights and model cards are published on Hugging Face and in the Azure AI model catalog, and Microsoft’s official GitHub organization hosts related sample code and documentation. Search those platforms for “Phi-3” to find them.

Typical uses for Phi-3 and similar small language models

Small language models like Phi-3 have a variety of applications:

- Language translation

- Text summarization

- Chatbot development

- Assisting with code autocompletion

Research publication on Phi-3

The Phi-3 technical report, “Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone,” is published on arXiv, where researchers and practitioners can review the model’s technical details and benchmark results.

Downloading Phi-3 models for projects

You can download Phi-3 models for personal or professional projects from the Microsoft Azure AI model catalog or from Hugging Face, then integrate them into your applications.
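For the Hugging Face route, a minimal download sketch using the `huggingface_hub` library’s `snapshot_download` function. It assumes the `microsoft/Phi-3-mini-4k-instruct` repository and a local target folder; `download_kwargs` and `download_phi3` are hypothetical helper names, and the file patterns are an assumption about which files a typical checkpoint needs.

```python
from typing import Any, Dict, List

# Assumed file types needed to run a checkpoint: configs, tokenizer files, weights, model code.
DEFAULT_PATTERNS: List[str] = ["*.json", "*.safetensors", "*.model", "*.py", "*.txt"]


def download_kwargs(repo_id: str, local_dir: str) -> Dict[str, Any]:
    """Collect arguments for snapshot_download, restricting it to the assumed file types."""
    return {"repo_id": repo_id, "local_dir": local_dir, "allow_patterns": list(DEFAULT_PATTERNS)}


def download_phi3(
    repo_id: str = "microsoft/Phi-3-mini-4k-instruct", local_dir: str = "phi-3-mini"
) -> str:
    """Fetch the selected files and return the local folder path (requires network access)."""
    # Imported lazily so the helper above works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(**download_kwargs(repo_id, local_dir))
```

Calling `download_phi3()` fetches the files once; the returned folder can then be passed as the `model` argument to `transformers.pipeline` for offline use.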