Have you ever wondered how AI can generate human-like text?

Large Language Models, or LLMs, are the brains behind this incredible capability.

These models are designed to understand and generate natural language, making them useful for tasks like translation, content creation, and code generation.

But what exactly is an LLM?

Defining LLM in AI

An LLM is a machine-learning model trained on immense amounts of text data. This training allows it to predict and generate human-like text based on the patterns and structures it has learned.

Think of it like a super-smart text predictor that can craft coherent and contextually relevant responses.

In the next sections, you’ll learn more about how LLMs are trained, their applications in the real world, and the challenges they face.

Overview of language models

Language models are systems that predict and generate language. They aren’t limited to long-form writing: you see a simple one in action whenever your smartphone suggests the next word as you type.

These models work by understanding patterns in data. For example, they analyze how words and phrases typically follow each other.
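
To make the idea of "how words follow each other" concrete, here is a minimal sketch of the simplest possible language model: one that counts which word tends to come after each word. The corpus and the function names `train_bigram_model` and `predict_next` are invented for illustration. Real LLMs are far more sophisticated, but the underlying goal of predicting what comes next is similar.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus, invented for illustration only.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" most often in this corpus
```

A model like this only ever looks one word back, which is why it cannot hold a conversation. Larger models take far more context into account.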

There are many types of language models, but the large ones are the most powerful.

They are trained on huge datasets. This helps them learn better and become more accurate.

Because of this, they can handle a wide range of tasks. You will find them in chatbots, translation services, and even in game storylines.

Understanding “Large Scale”

The ‘large’ in Large Language Models refers both to the amount of data used for training and to the number of parameters, the values the model adjusts as it learns.

These models use vast datasets, often billions of words. This makes them highly sophisticated.

For instance, models like GPT-3 are trained on diverse data sources, from books to online articles.

The scale of these models also impacts their performance.

They can generate text that is coherent and contextually accurate.

They handle tasks ranging from simple question-answering to complex content creation.

Due to their large-scale nature, they require significant computational resources. Despite this, their applications are growing in fields like customer service and content writing.

Applications of LLMs

Large language models (LLMs) have revolutionized the field of AI by enabling advances in various domains. Key applications include natural language processing, machine translation, and content generation.

Natural language processing

In natural language processing (NLP), LLMs excel in understanding and generating human language.

These models can analyze text data to recognize patterns and predict next words or sentences.

This capability is used in spam detection, sentiment analysis, and chatbots.

Chatbots, powered by LLMs, handle customer service queries efficiently, providing quick and accurate responses.

Sentiment analysis tools evaluate customer feedback to gauge public opinion.

LLMs also improve text summarization, extracting key points from large documents.

Machine translation

LLMs significantly enhance machine translation, allowing for more accurate and fluent translations between languages.

Using vast amounts of multilingual data, these models learn linguistic nuances and context, resulting in better quality translations.

Tools like Google Translate rely on closely related neural network technology to provide real-time translations, improving communication across different languages.

Businesses use these tools for global operations, breaking language barriers in international markets. Improved translation fosters better understanding and collaboration.

Content generation

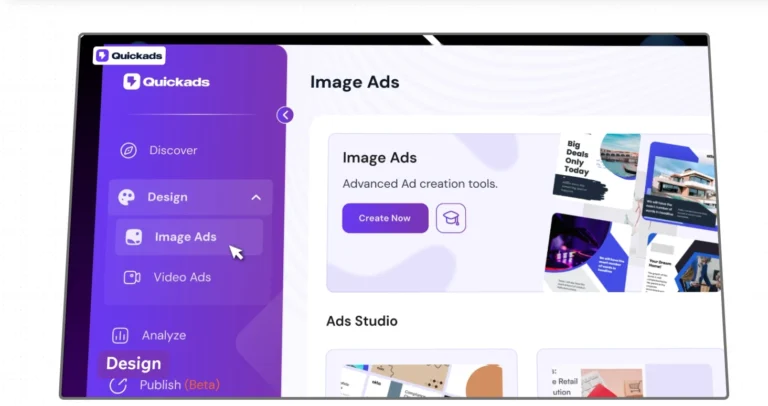

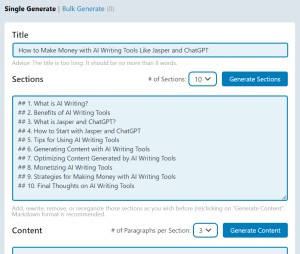

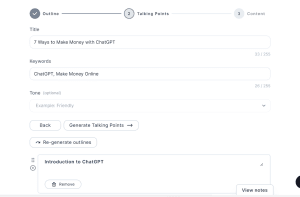

LLMs are also used in content generation, automating writing tasks and crafting creative content.

They create articles, reports, and even fiction using prompts and context provided by users. This speeds up content creation and reduces manual effort.

Marketing teams use LLMs to generate product descriptions, blog posts, and social media content.

They ensure consistency and quality, enhancing engagement. Content generation tools powered by LLMs enable writers to focus on strategic planning and creativity.

Technical foundations

Large language models (LLMs) are advanced AI systems that process and generate human-like text. They rely on neural networks, specialized training methods, and specific model architectures to function effectively.

Neural networks and deep learning

Neural networks are the backbone of LLMs. These networks consist of layers of interconnected nodes, or neurons, that process data.

Each neuron applies a mathematical function to its inputs and passes the output to the next layer.
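
As a rough sketch of what "applies a mathematical function and passes the output on" means, the snippet below builds a tiny two-layer network by hand. The weights are hypothetical values picked for the example, and the sigmoid activation is just one common choice. In a trained network these numbers would be learned rather than typed in.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias):
    """Weighted sum of inputs plus bias, passed through an activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(total)


def layer(inputs, weight_rows, biases):
    """A layer is a list of neurons that all see the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical weights; a trained network would learn these values.
hidden = layer([1.0, 2.0], [[0.5, -0.25], [0.1, 0.3]], [0.0, -0.7])
output = layer(hidden, [[1.0, -1.0]], [0.0])
print(hidden, output)
```

The output of the first layer becomes the input of the second, which is all that "passing the output to the next layer" amounts to.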

Deep learning involves using neural networks with many layers, known as deep neural networks.

This depth allows the model to learn complex patterns in large datasets.

In these models, each layer learns different features of the data.

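In image models, for example, earlier layers might learn simple features like edges, while later layers learn more complex features like shapes or objects. In language models, earlier layers tend to capture word-level patterns, while later layers capture broader meaning and context.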

The power of neural networks in LLMs comes from their ability to learn from vast amounts of data.

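This learning is largely self-supervised: the training signal comes from the text itself (for example, the word that actually comes next) rather than from labels added by people.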

With enough layers and data, neural networks can learn to understand and generate human language effectively.

Training and data sets

Training an LLM requires immense computational resources and large datasets.

The training process involves feeding the model large amounts of text data and adjusting its parameters to minimize errors.
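
"Adjusting parameters to minimize errors" usually means some form of gradient descent. The sketch below shows the idea on a deliberately tiny problem with a single parameter `w`. The data, learning rate, and step count are made up for the example, and a real LLM applies the same principle to billions of parameters at once.

```python
def train(examples, learning_rate=0.05, steps=200):
    """Fit y = w * x by gradient descent on mean squared error."""
    w = 0.0  # start from an arbitrary parameter value
    for _ in range(steps):
        # Gradient of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in examples) / len(examples)
        w -= learning_rate * grad  # step against the gradient to reduce error
    return w


# Toy data generated from y = 3x, so the ideal parameter is w = 3.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
print(round(train(data), 3))
```

Each step nudges the parameter in the direction that lowers the error, and repeating this many times brings `w` close to the value that fits the data.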

Pre-training is the first phase. Here, the model is trained on a large corpus of text using self-supervised learning, so no manually labeled data is needed.

It learns the structure and patterns of the language.

The goal is to predict the next word in a sentence, allowing the model to understand context.
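
One common way to score a next-word prediction is cross-entropy loss: the model is penalized according to how little probability it gave to the word that actually appeared. The probabilities below are hypothetical, standing in for what a model might output after the words "the cat sat on the".

```python
import math


def next_word_loss(predicted_probs, actual_next_word):
    """Cross-entropy for one prediction: the negative log of the
    probability the model assigned to the word that actually came next."""
    return -math.log(predicted_probs[actual_next_word])


# Hypothetical model output for the context "the cat sat on the".
probs = {"mat": 0.7, "roof": 0.2, "moon": 0.1}

print(next_word_loss(probs, "mat"))   # lower loss: the true word got high probability
print(next_word_loss(probs, "moon"))  # higher loss: the true word got low probability
```

Training pushes this loss down across an enormous number of examples, which is how the model gradually gets better at using context.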

Fine-tuning follows pre-training. This phase adjusts the pre-trained model using a smaller, task-specific dataset.

Fine-tuning improves the model’s performance on specific tasks like translation or question answering.

It’s crucial to use diverse and high-quality datasets.

The quality of the training data directly affects the model’s performance.

Larger and more varied datasets help create more robust and generalizable LLMs.

Model architecture

LLMs use specific architectures that determine how the network is structured.

One common architecture is the transformer.

This model uses attention mechanisms to focus on different parts of the input data efficiently.

Transformers have revolutionized LLMs. They process all the words in an input in parallel rather than one at a time, as older recurrent networks did, which makes them faster to train and better at capturing long-range context.

Attention mechanisms within transformers allow the model to weigh the importance of different input parts, improving understanding and prediction.
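
Here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python so every step is visible. The vectors are made-up two-dimensional examples. Production models use optimized tensor libraries and many attention heads, so treat this as an illustration of the weighting idea rather than a real implementation.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Three input positions with made-up 2-dimensional keys and values.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention([2.0, 0.0], keys, values)
print(weights, output)
```

Positions whose keys line up with the query receive larger weights, so they contribute more to the output. That is the sense in which the model "weighs the importance" of different parts of the input.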

Another key component is the number of parameters in the model.

Parameters are values the model adjusts during training.

More parameters generally lead to better performance but require more computational resources.
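
To get a feel for where the parameters come from, the sketch below estimates the size of a decoder-only transformer from three settings. It assumes a feed-forward hidden size of four times the model width and ignores small terms such as biases and layer norms, so the result is a ballpark figure only. The configuration shown is hypothetical.

```python
def estimate_parameters(n_layers, d_model, vocab_size):
    """Rough parameter count for a decoder-only transformer.

    Assumes a feed-forward hidden size of 4 * d_model and ignores
    biases, layer norms, and positional embeddings.
    """
    attention = 4 * d_model * d_model      # query, key, value, output projections
    feed_forward = 8 * d_model * d_model   # two matrices of d_model x (4 * d_model)
    embeddings = vocab_size * d_model      # one vector per vocabulary entry
    return n_layers * (attention + feed_forward) + embeddings


# Hypothetical configuration, chosen only to show the arithmetic.
print(estimate_parameters(n_layers=12, d_model=768, vocab_size=50000))
```

Because the per-layer cost grows with the square of the model width, doubling `d_model` roughly quadruples the parameters in each layer.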

The architecture also includes components like layers, attention heads, and feed-forward networks.

Each element has a specific role in processing data and generating text.

The design and complexity of the architecture largely influence the capabilities of an LLM.

Challenges and limitations

Large Language Models (LLMs) in AI have made significant strides, but they come with notable challenges. You need to be aware of ethical concerns, the massive computational resources required, and inherent biases.

Ethical considerations

LLMs can generate vast amounts of content, which raises ethical questions.

They can create fake news or plagiarize existing content. This makes it crucial to monitor their outputs responsibly.

Misuse can lead to the spread of misinformation.

Ensuring that LLMs are used ethically requires constant oversight and regulation.

Privacy is another concern. LLMs can inadvertently reveal sensitive information that appeared in their training data.

Safeguards are necessary to prevent data breaches.

Balancing innovation with responsibility is vital for the ethical use of these models.

Computational resource requirements

Training LLMs demands extensive computational power.

This includes powerful GPUs and vast amounts of electricity.

Such requirements can make LLM use expensive and limit access to only well-funded organizations.
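
A widely used rule of thumb puts training cost at roughly six floating-point operations per parameter per training token. The sketch below applies it to get a back-of-the-envelope estimate. Every number in the example is a hypothetical placeholder, including the sustained GPU throughput, so the result shows scale rather than a real budget.

```python
def training_flops(n_parameters, n_tokens):
    """Rule-of-thumb estimate: about 6 floating-point operations
    per parameter per training token (forward plus backward pass)."""
    return 6 * n_parameters * n_tokens


def gpu_days(total_flops, flops_per_gpu_per_second):
    """Days of work for one GPU running at the given sustained rate."""
    seconds = total_flops / flops_per_gpu_per_second
    return seconds / 86400


# All numbers below are hypothetical placeholders, not measurements.
flops = training_flops(n_parameters=1e9, n_tokens=20e9)
print(f"{flops:.2e} FLOPs, {gpu_days(flops, 1e14):.1f} GPU-days")
```

Scaling either the model or the dataset by ten multiplies the estimate by ten, which is why costs climb so quickly for the largest models.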

The environmental impact is significant. The energy consumption for training large models can be enormous.

Efficient usage and advances in technology are needed to mitigate this impact.

Smaller firms might find these costs prohibitive.

Moreover, running these models requires continuous maintenance.

This adds to the operational costs. Efficient resource management is key to sustaining LLM operations.

Model bias

LLMs often exhibit biases based on the data they are trained on.

These biases can reflect societal prejudices.

Addressing model bias is a major challenge. Biased outputs can lead to unintended consequences.

Users might see biased content that reinforces stereotypes.

No dataset is completely free of bias, so it is essential to curate training data that is as balanced and representative as possible.

Regular audits of model outputs help to identify and correct biases.
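
A very simple audit might compare how often a given term shows up in outputs for different groups. The sketch below assumes you have already collected model outputs for each group. The sample sentences, the group names, and the helpers `flagged_rate` and `audit` are all invented for illustration, and a real audit would use far larger samples and more careful metrics.

```python
def flagged_rate(outputs, flagged_terms):
    """Fraction of outputs containing at least one flagged term."""
    hits = sum(
        any(term in text.lower() for term in flagged_terms) for text in outputs
    )
    return hits / len(outputs)


def audit(outputs_by_group, flagged_terms):
    """Compare flagged-term rates across groups and report the largest gap."""
    rates = {
        group: flagged_rate(outputs, flagged_terms)
        for group, outputs in outputs_by_group.items()
    }
    gap = max(rates.values()) - min(rates.values())
    return rates, gap


# Invented sample completions standing in for real model outputs.
samples = {
    "group_a": ["She is a nurse.", "She is an engineer."],
    "group_b": ["He is an engineer.", "He is an engineer."],
}
rates, gap = audit(samples, flagged_terms=["nurse"])
print(rates, gap)
```

A large gap between groups does not prove bias on its own, but it is a useful signal for where to look more closely.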

Bias mitigation techniques are evolving. They must be part of the LLM development process.

By focusing on fair and diverse datasets, the goal is to reduce the risk of biased content.

LLM FAQs

Here are answers to common questions about large language models (LLMs). Learn about their functions, applications, differences from other technologies, and roles in AI.

How does an LLM function within the field of AI?

LLMs are a category of AI models designed to process and generate natural language.

They function by analyzing vast amounts of text data to predict and generate words based on context.

These models use multiple layers of neural networks to understand language patterns.

What are some examples of applications for LLMs?

LLMs have many practical uses.

They are used in chatbots, text generation, machine translation, and summarizing content.

Multimodal variants can even generate text descriptions of images.

These models help many industries by making text-based tasks faster and more accurate.

Can you explain the differences between LLMs and NLP technologies?

LLMs and NLP technologies both deal with human language, but there are distinctions.

NLP includes a wide range of methods for processing language, while LLMs specifically focus on using large datasets and deep learning to predict and generate text.

NLP might include rule-based systems, whereas LLMs rely on vast data and neural networks.

In what ways do GPT models relate to LLMs?

GPT models are a specific type of LLM.

They are designed by OpenAI and use a transformer architecture to generate human-like text.

GPT models predict the next word in a sequence, making them highly effective for various language tasks.

They are among the most advanced and widely used LLMs.

What roles can LLMs play in enhancing conversational AI platforms?

LLMs enhance conversational AI platforms by improving the accuracy and fluency of generated responses. They help chatbots understand and reply to user queries more naturally.

This makes interactions more human-like and efficient, enhancing user experiences on these platforms.